DISCLAIMER

The draft recommendations contained herein were preliminary drafts submitted for discussion purposes only and do not constitute final determinations. They have been subject to modification, substitution, or rejection and may not reflect the adopted positions of IFATCA. For the most current version of all official policies, including the identification of any documents that have been superseded or amended, please refer to the IFATCA Technical and Professional Manual (TPM).

62ND ANNUAL CONFERENCE, Montego Bay, Jamaica, 8-12 May 2023

WP No. 161

Artificial Intelligence Development in ATM and Legal Issues

Presented by PLC & TOC

Summary

AI systems are developing continuously and quickly, moving from human-controlled systems to semi-automated and fully automated ones. This calls for an investigation of new projects and validation activities for future automated ATC tools, and of their implications for ATCOs’ responsibility. This working paper is intended to confirm and supplement the existing document WP No:92/2020 regarding AI and its policy.

Introduction

Explaining AI

1.1. Artificial intelligence (AI) refers to systems that display intelligent behaviour by analysing their environment and taking actions – with some degree of autonomy – to achieve specific goals (European Commission, 2018).

1.2. AI systems are software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information derived from this data and deciding the best action(s) to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model. They can also adapt their behaviour, by analysing how the environment is affected by their previous actions.

1.3. As a scientific discipline, AI includes several approaches and techniques, such as machine learning (of which deep learning and reinforcement learning are specific examples), machine reasoning (which includes planning, scheduling, knowledge representation and reasoning, search, and optimization), and robotics (which includes control, perception, sensors and actuators, as well as the integration of all other techniques into cyber-physical systems) (High-Level Expert Group on Artificial Intelligence, 2018).

The Genesis of AI

1.4. The concept of AI dates back to the 1950s, when Marvin Minsky and John McCarthy focused their studies on the field of automation. They pioneered the idea that artificial machines could take over some duties and jobs normally carried out by humans.

1.5. In a certain way, the first theoretical concept of AI can be associated with the possibility of manufacturing robots able to do some activities in an autonomous way, without the need for human presence.

1.6. The concept remained purely theoretical for a long time because the technology needed for real applications was lacking. Practical work started only about 40 years later, when systems with high computing capacity became available in the research field.

1.7. The first technological inventions can be associated with machines that appeared in the 1990s and were able to execute some duties, such as simple manual work or mathematical calculations.

1.8. Nowadays, the high levels achieved in technology and computer science represent a real possibility for the development of AI in many fields, with machines able to execute complex duties after acquiring the necessary knowledge through observation, comparison, discrimination, and elaboration of huge amounts of data.

1.9. The creation of robots for the execution of manual work is just a first and simple way of applying the AI concept to technology.

1.10. At present, the main focus is on studying and building artificial systems that can lead to the full automation of most human activities in sectors such as, but not limited to, automotive, medicine, finance, the social and humanistic fields, industrial production, management and scientific research.

AI in ATM

1.11. The application of automated complex systems in Air Traffic Management (ATM) is very recent, and its development is slower and more limited than in other economic sectors.

1.12. The reason for this delay can be found in the peculiarity of ATM, in terms of a dynamic environment and the requirement for high levels of safety operations and security of data processing.

1.13. The first attempts at introducing AI in ATM concern data exchange among different users, where technology is used to predict the evolution of flights, both on the ground and airborne. In addition, such systems disseminate data with high precision, taking into account many physical variables and the airspace architecture.

1.14. The development of this kind of system started to involve other aspects of the ATM environment, especially those duties normally performed by ATCOs and pilots.

1.15. At present, the level of automation is still limited to a few tasks and is often represented by possible solutions suggested to the operator who has the final decision on the problem, such as coordination of levels and times, rerouting in case of airspace restrictions, etc.

1.16. IFATCA already has policies in the Automation field, both on the technical and human factor side (IFATCA TPM, 2022: WC 10.2.5).

1.17. These first applications are just the prelude to achieving future evolution into fully automated systems, where the figure of the operator will be more similar to a system engineer, much different from today’s concept of ATCO.

Figure 1. Transition in ATCO’s duties

1.18. The transition from basic data processing systems to fully automated ones is characterised by an intermediate step described as “semi-automated systems”.

1.19. At this level of automation, one or more options are suggested to the operators and represent an initial intrusion into human activities that can limit or condition the final decision of the operator, even if the process is still under human control.

Discussion

In this section, we look over some projects that use the technology of AI systems in ATM, with expected future development into fully automated systems.

MAHALO

2.1. Modern ATM via Human/Automation Learning Optimization (MAHALO) is a SESAR research project conducted by a consortium formed by Deep Blue, Center for Human Performance Research (CHPR), Delft University of Technology, Linköping University and the Swedish Civil Aviation Administration.

2.2. The goal of this project is to identify and create an AI-based conflict detection and resolution tool.

2.3. The simulation environment recreates realistic scenarios in which ATCOs experience different levels of transparency and conformance; the research will measure the best trade-off between those two concepts and draw conclusions accordingly.

2.4. The new tools should enable operators not only to identify conflicts but also to receive possible solutions to them. The suggested actions will derive from available data and from lessons learned in comparable air traffic situations and environments.
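To make the term "conflict detection" concrete, the following is a minimal sketch of a classical geometric check based on the closest point of approach between two aircraft flying straight at constant speed. It is not the MAHALO method, which is AI-based and learns from data; it only illustrates the kind of question such a tool answers. The `Track` class, the function names, the 5 NM separation minimum and the 20-minute look-ahead are all assumptions made for illustration, and altitude is ignored.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical flat-earth aircraft state (illustrative only)."""
    x: float   # position east, NM
    y: float   # position north, NM
    vx: float  # velocity east, NM per minute
    vy: float  # velocity north, NM per minute


def closest_approach(a: Track, b: Track) -> tuple[float, float]:
    """Return (time in minutes, distance in NM) of closest approach, with time >= 0."""
    rx, ry = b.x - a.x, b.y - a.y          # relative position
    vx, vy = b.vx - a.vx, b.vy - a.vy      # relative velocity
    v2 = vx * vx + vy * vy
    # With zero relative velocity the distance never changes, so use t = 0.
    # A negative t means the aircraft are already diverging, so clamp to 0.
    t = 0.0 if v2 == 0 else max(0.0, -(rx * vx + ry * vy) / v2)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def is_conflict(a: Track, b: Track,
                min_sep_nm: float = 5.0,        # assumed separation minimum
                lookahead_min: float = 20.0) -> bool:  # assumed look-ahead
    """Flag a predicted loss of horizontal separation within the look-ahead."""
    t, d = closest_approach(a, b)
    return t <= lookahead_min and d < min_sep_nm
```

For two aircraft flying head-on along the same line, `is_conflict` returns `True`; offsetting one of them laterally by 10 NM makes it return `False`. A resolution tool would go one step further and search for a heading, level or speed change that clears the predicted conflict.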

2.5. At this stage, the management of traffic and the final decision are still fully under human control and responsibility; the level of impact on the ATCO’s job will depend on how much these new tools can influence and/or limit the ATCO’s actions.

2.6. It is not difficult to imagine that future development and application of this kind of software could potentially aim to achieve full operational control.

2.7. At that stage the systems must demonstrate they have reached the knowledge and capability to handle traffic situations, both normal and critical, assuring high standard levels in terms of safety and capacity (MAHALO Project, n.d.).

ARTIMATION

2.8. Artificial intelligence and Automation to Air Traffic Management Systems (ARTIMATION) is a SESAR research project managed and developed by a consortium formed by Mälardalen University, Deep Blue, École nationale de l’aviation civile (ENAC) and Sapienza University.

2.9. In Air Traffic Management, the decision-making process is already associated with AI. The algorithms are meant to help ATCOs with daily tasks, but they still face acceptability issues. Today’s automation systems with AI/Machine Learning do not provide any information beyond the data processing result to explain it, so they are not transparent enough. The aim is for the decision-making process to become understandable.

2.10. ARTIMATION’s goal is to provide a transparent and explainable AI model through visualisation, data-driven storytelling, and immersive analytics. This project will take advantage of human perceptual capabilities to better understand AI algorithms with appropriate data visualisation as a support for explainable AI (XAI), exploring in the ATM field the use of immersive analytics to display information. ARTIMATION will disseminate every project concept and outcome to everyone who showed interest in the project (Artimation, n.d.).

2.11. The project assessed two use cases: the Conflict Detection and Resolution visualisation tool, and the Delay Prediction tool. What is better, an explanation through an interface or an algorithmic explanation? Is Explainable AI useful in training? These questions are going to be answered in the final dissemination events.

REMOTE and VIRTUAL TOWER (RVT)

2.12. Many remote tower implementations and projects are already in place, and many more are expected to start in the near future. IFATCA has a dedicated task force for this topic. Many documents have been published and others will be produced in the future.

2.13. After a few years of research and validation activity, this new concept of control tower is live in many countries, mainly in Europe, and other projects and validation activities are under way in North America and the Middle East.

2.14. At first, the idea of a remote tower was inspired by the need to provide ATC service to airfields located in remote geographical areas with very low traffic volumes. But the concept was soon adapted to busier aerodromes as well, where it is seen as a possible solution to the problems of visibility and hazard detection from conventional towers at some larger airports.

Figure 2. Remote tower (Leonardo)

2.15. The whole concept is based on positioning sensors and cameras on the airfield so that the real situation and environment can be recreated in a virtual operations room that can be located anywhere. Associated with the remote tower project are many other research activities that are considered complementary to a complete digitalization of towers.

2.16. The correlation between AI and Remote and Virtual Tower (RVT) can be identified in the AI systems that can be implemented in the RVT concept and that can assist and make tower operations safer and more efficient (IFATCA, 2019).

2.17. For example, a ground AI system could assist the ATCO in identifying the best way to manage ground operations, providing the best solutions for parking activities, taxiing, sequence, etc., taking into consideration given data like slot times, works in progress, handling availability, weather conditions and other information.

2.18. The best solutions can be proposed to the ATCO who can verify them and decide if acceptable; the instructions to the aircraft could be also given using a data link connection, avoiding voice communications and frequency overload or misunderstanding.

Figure 3. Remote tower – Frequentis TowerPad (Frequentis)

2.19. Other AI solutions could be also related to camera and weather software that can analyse images and data, determining the real possible impact of detected hazards or weather forecasts on operations.

Possible future applications

2.20. The introduction of AI can involve many aspects of ATM. This would allow the aviation sector to move from fully human-controlled operations to more automated systems.

2.21. In particular, from an ATC point of view, the new technologies focus on the management of flights, both on the ground and in the air.

2.22. The use of digital connections, cameras, sensors, voice recognition systems and other devices is pushing the industry to move from the traditional concept of control towers to remote ones, where physical location is not important anymore.

2.23. The same evolution is already visible, and is expected to progress further, for en-route traffic. Automated data exchange is already in place, but future applications will also allow flights to be managed dynamically, for example through optimised climbs and descents, routings, separation, and avoiding actions for any particular reason or restriction.

2.24. At present, most studies are concentrated on single aspects. This is because it is necessary to reach the minimum standard for safe and acceptable operations, before considering potential implementation.

2.25. In the next phase, each single new technology will be integrated into a complex system that will be able to manage the traffic with the same standards, but at a wider scope.

2.26. We must expect that in the medium/long term, automatic systems will increasingly assist in managing aircraft from the apron to the runway, with tower controllers mainly acting as supervisors, ready to intervene in case of disruption or unexpected situations.

2.27. The same will happen at area control level. New systems will be able to manage the traffic in some phases of the flight, optimising the use of aircraft and airspace.

Expected finalisation of projects

2.28. The industry is pushing hard for the development of new technologies and systems because it wants to create new ways of working, find new market segments and justify its investments.

2.29. At the same time, ANSPs are interested in new technologies that can help to reduce costs in the long term by removing logistical constraints and reducing the number of employees.

2.30. This interest in new technologies led to new collaborations among all aviation stakeholders, in order to reach a high level of automation and digitalization within a few years.

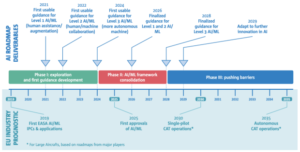

2.31. In parallel with the evolution of commercial air transport as forecast by EASA in its AI roadmap, the full automation of ATC systems could be a reality by 2035 (European Union Aviation Safety Agency, 2020).

Figure 4. EASA AI Roadmap 1.0

2.32. Even though many consider this deadline unrealistic, the coming decades will be characterised by the development of new technical solutions, architectural concepts, and research and validation projects, all aimed at the final goal of complete digitalization and automated systems.

Pros and cons of AI Systems in ATM

2.33. Just as in any other sector where changes, new working concepts and systems are deployed, the introduction of automation in ATM, with machines and applications able to handle some duties once performed by humans, can be a positive aspect of technological evolution. Nevertheless, a critical analysis of its appropriateness must be carried out.

2.34. In particular, a basic distinction must be made between systems that provide users with tools to help them carry out some tasks without limiting or excluding human control, and systems implemented to substitute the operators in part or completely in their job.

2.35. The benefits expected from the digitalization of ATM are mainly related to operational issues such as flight efficiency, improved strategic planning, enhanced trajectory prediction and fuel efficiency, but also financial ones with particular attention to cost reduction figures.

2.36. The introduction of technology in the last three decades has always been perceived as an advantage to human work, offering new tools and resources able to create better working conditions and relief from heavy and monotonous tasks.

2.37. With the remarkable speed of technological development in recent years, the objectives of modernization and digitalization are no longer only to reduce the impact of work on human operators but also to reach levels of imitation and decision-making that can substitute the human element in the process, with the final goal of increasing safety, productivity and reliability.

2.38. At present, the introduction of AI systems is simply confined to the identification and development of new concepts and tools that can assist operators, thus making their tasks easier to carry out, in a safer and more efficient environment.

2.39. At this technological stage, the main controversial issues regarding automation relate to its level of influence on human operators and the way they work. In particular, the transparency of data processing and of the outcome produced by the algorithm is among the main issues discussed today when dealing with AI applied to ATM.

2.40. Another critical aspect is the management of contingency situations. This is because the high level of automation can make it difficult, if not impossible, to take over the traffic situation in case of system failure, no matter the experience, training and procedures that could be in place.

2.41. It is widely accepted that, when on duty, operators rely on systems more heavily the more those systems perform tasks automatically or assist their users. In particular, ATCOs shall have knowledge of how the systems work, process data, provide suggestions or deliver information.

2.42. It is essential that ATCOs have the possibility to assess in time whether the given AI solutions are incorrect or not the best ones, in order to intervene promptly and with success.

2.43. In this new scenario, the main consequences can be identified as: a reduction in working positions because of partial automation; a lack of adequate personnel training and of knowledge of the systems in use; high traffic loads that cannot be easily and safely managed by human operators in case of disruption; and missing or inadequate legislation on the responsibility of operators when AI systems are used.

Definitions of Responsibility and Liability

2.44. The term responsibility can have different meanings:

- the state of being the person who caused something to happen;

- a duty or task that you are required or expected to do;

- the state of having the job or duty of dealing with and taking care of something or someone;

- the quality of a person who can be trusted to do what is expected, required, etc.

2.45. The most generic definition is that ‘there arises an obligation by the Law of Nature to make reparation for the damage, if any be done’ (Pellet, 2007).

2.46. The term liability describes the state of being legally responsible for something (Merriam-Webster, n.d.).

Legal Impact on AI Operators

2.47. The starting point of the legal analysis is the application to AI of the developing legal norms around software and data. In legal terms, AI is a combination of software and data. The software (instructions to the computer’s processor) is the implementation in code of the AI algorithm (a set of rules to solve a problem). What distinguishes AI from traditional software development is, first, that the algorithm’s rules and software implementation may themselves be dynamic and change as the machine learns; and second, the very large datasets that the AI processes (what was originally called big data). The data comprises the input data (training, testing and operational datasets), that data as processed by the computer, and the output data (including data derived from the output) (Kemp, 2018).

2.48. AI development is leading governments and policymakers around the world to grapple with what AI means for law, policy and regulation and the necessary technical and legal frameworks.

2.49. There are legitimate concerns about the intentional and unintentional negative consequences of using AI systems as outlined by the Assessment List for Trustworthy AI (ALTAI). Accountability calls for mechanisms to be put in place to ensure responsibility for the development, deployment and/or use of AI systems – risk management, identifying and mitigating risks in a transparent way that can be explained to and audited by third parties.

Transparency and explainability

2.50. One solution to the question of intelligibility is to try to increase the technical transparency of the system so that experts can understand how an AI system has been put together. This might, for example, entail being able to access the source code of an AI system. However, this will not necessarily reveal why a particular system made a particular decision in a given situation. An alternative approach is explainability, whereby AI systems are developed in such a way that they can explain the information and logic used to arrive at their decisions (UK Parliament, 2018).

2.51. The transparency of an AI system is useful for people to understand how the system reacts in any situation and to show where it can be improved. This is something that helps to update the existing code.

2.52. Explainability is needed when a human has to intervene and take control in the middle of an ongoing situation. The human needs to understand why the AI is executing a particular decision.

Responsibility of ATCOs using AI systems

2.53. AI may be implemented at different levels in the ATM system. In a human-centric system, the human is of course responsible for the decisions he or she has made. But AI systems may also be semi-automated or fully automated, so ATCOs’ level of responsibility needs to be proportionate to the level of actual control that the human has.

2.54. When developing a system that uses AI to support ATCO’s duties, there shall be a revision of what the duties of the person are and transparency in understanding what the machine is doing.

2.55. In particular, the responsibility of the operator shall be limited to the effective level of control and decision-making, which is influenced by the type and level of automation.

2.56. Deployment of AI technology may also lead to the involvement of more parties/entities who can be held liable if something goes wrong, such as the programmers who coded the AI system and/or the ANSP who introduced the system and assured that it meets the required level of safety.

2.57. One possibility is to apply Article 12 of the United Nations Convention on the Use of Electronic Communications in International Contracts. This states that a person (whether a natural person or a legal entity), on whose behalf a computer was programmed, should ultimately be responsible for any message generated by the machine. Such an interpretation is consistent with the general principle that the user of a tool is responsible for the results obtained by the tool as the tool has no independent volition of its own. In this case, the tool is AI and any liability arising from its use lies with the ‘user’. A ‘user’ can be the developer, the implementer or approver of the AI. This permits the responsibility for the AI, or any liability arising from its use, to expand beyond just the end user (Bühler, 2020).

Levels of implementation of AI systems

2.58. Transitioning from a fully human-controlled system to a partially controlled (semi-automated) and fully automated system also requires changing ATCO’s duties and liabilities.

2.59. In a semi-automated system, the AI gives one or more possible solutions in any situation and the ATCO has to decide which one is the best to apply. The person thus turns from the central driver of the system into a part of it. However, for such a system to work, the ATCO shall be offered the opportunity to understand the reasoning behind the decision presented by the AI. Innovative techniques should be implemented to develop auditable machine learning algorithms, in order to explain the rationale behind algorithmic decisions and check for bias, discrimination and errors.
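The semi-automated arrangement described above can be sketched as a small decision-support loop in which the system only proposes, the ATCO decides, and every decision is recorded so that it can be audited later. This is a minimal illustration under stated assumptions, not a description of any existing ATM system: the names `Proposal`, `DecisionLog` and `decide`, the `score` field and the example actions are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Proposal:
    """One AI-suggested solution, together with its explanation (hypothetical)."""
    action: str       # e.g. "turn B 20 degrees right"
    rationale: str    # human-readable reasoning shown to the ATCO
    score: float      # the system's own ranking value, higher is better


@dataclass
class DecisionLog:
    """Append-only record of what was proposed and what the human chose."""
    entries: list = field(default_factory=list)

    def record(self, proposals, chosen, decided_by):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "proposals": [(p.action, p.rationale, p.score) for p in proposals],
            "chosen": chosen,
            "decided_by": decided_by,
        })


def decide(proposals: list, atco_choice: Optional[int],
           log: DecisionLog) -> Optional[str]:
    """Rank the proposals, apply the ATCO's choice and log the outcome.

    atco_choice is an index into the ranked list, or None if the ATCO
    rejects every proposal and acts independently.
    """
    ranked = sorted(proposals, key=lambda p: p.score, reverse=True)
    chosen = None if atco_choice is None else ranked[atco_choice].action
    log.record(ranked, chosen, decided_by="ATCO")
    return chosen
```

The design choice worth noting is that the function never applies a proposal on its own: the selection always comes from the human, and the log keeps the rationale that was displayed at the time, which is the kind of record an audit for errors or bias would need.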

2.60. In a fully automated system, the person is more like an operator who supplies the system with data and monitors its correct operation. So far, automation has proved it can increase airspace capacity by allowing for more complex and higher-level services. In this concept, a major question is: if the system can deal with a greater amount of traffic and complexity, how is the person able to monitor that? The person may be unable to grasp all the functions and to maintain proper situational awareness. As a result, the person could not act as the fallback for such a system.

Conclusions

3.1. These requirements limit the application of fully automated systems in ATM, and the transition period will probably be longer and more complicated than in other sectors.

3.2. We must be ready if this change becomes real and most of today’s ATCOs’ tasks will be executed by automation instead of human operators, even if their presence will still be necessary in order to verify the correct functioning of systems and intervene in case of disruption.

3.3. There are existing policies in the IFATCA Technical and Professional Manual (TPM) regarding automation and artificial intelligence:

WC 10.2.5 AUTOMATION / HUMAN FACTORS

Automation shall improve and enhance the data exchange for controllers. Automated systems shall be fail-safe and provide accurate and incorruptible data. These systems shall be built with an integrity factor to review and crosscheck the information being received.

The human factors aspects of Automation shall be fully considered when developing automated systems. Automation shall assist and support ATCOs in the execution of their duties. The controller shall remain the key element of the ATC system. Total workload should not be increased without proof that the combined automated/human systems can operate safely at the levels of workload predicted and be able to satisfactorily manage normal and abnormal occurrences. Automated tools or systems that support the control function shall enable the controller to retain complete control of the control task in such a way as to enable the controller to support timely interventions when situations occur that are outside the normal compass of the system design, or when abnormal situations occur which require non-compliance or variation to normal procedures. Automation should be designed to enhance controller job satisfaction. The legal aspects of a controller’s responsibilities shall be clearly identified when working with automated systems. A Controller shall not be held liable for incidents that may occur due to the use of inaccurate data if he is unable to check the integrity of the information received. A Controller shall not be held liable for incidents in which a loss of separation occurs due to a resolution advisory issued by an automated system. Guidelines and procedures shall be established in order to prevent incidents occurring from the use of false or misleading information provided to the controller.

AAS 1.20 ARTIFICIAL INTELLIGENCE AND MACHINE LEARNING IN ATC

Artificial Intelligence and/or Machine Learning-based systems should only be implemented as Decision Support Systems and shall not replace the decision of the ATCO.

Where an ATCO is responsible for decision making, and in the event that system tools fail or are not available, the ATCO should always have the capacity to safely manage their area of responsibility.

3.4. While we do confirm the validity of IFATCA policies with reference to the AI implementation and automation quoted above, we also want to be prepared for the future scenario when digitalization will give options to fully autonomous system operations.

3.5. In particular, this long-term concept is more important when dealing with the legal aspects of ATCO’s responsibility.

3.6. Considering that changing laws and regulations is a long process in most countries and can last several years, it is essential that new responsibility limits and definitions are identified and brought to the attention of institutions and governments.

3.7. Appropriate changes in national legislation should be suggested and promoted to strengthen the concept that ATCOs’ responsibility must be lowered and proportional to the impact of automation and their control margin during operations.

Recommendations

4.1. It is recommended that the following be accepted as policy and added to AAS 1.20 ARTIFICIAL INTELLIGENCE AND MACHINE LEARNING IN ATC:

− The introduction of AI systems shall be tested and validated with the assurance of appropriate standard levels of operations in terms of safety, security, robustness, and reliability.

− In case of disruption and in addition to backup and continuity systems, appropriate procedures and training shall be put in place to assist ATCOs in emergency situations.

− With special attention to airspace capacity, traffic complexity and available backup systems, a safety risk assessment shall be carried out to determine the possibility for ATCOs to intervene in case of disruption.

− IFATCA and MAs shall undertake actions to raise awareness at an international and national level about the impact of automation in ATM and hasten for appropriate legal changes in ATCO’s responsibility.

− ATCO’s responsibility shall be proportionally decreased in accordance with the level of automation and their ability to intervene and control automated systems.

References

1. European Commission. (2018). Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions: Artificial Intelligence for Europe [COM (2018) 237 final]. Retrieved from https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:52018DC0795

2. High-Level Expert Group on Artificial Intelligence. (2018). Draft ethics guidelines for trustworthy AI. Retrieved from https://ec.europa.eu/futurium/en/system/files/ged/ai_hleg_definition_of_ai_18_december_1.pdf

3. Mahalo Project. (n.d.). Mahalo. http://mahaloproject.eu/

4. Artimation. (n.d.). Overview. https://www.artimation.eu/overview/

5. Artimation. (n.d.). Final event. Retrieved from https://www.artimation.eu/final-event/

6. International Federation of Air Traffic Controllers’ Associations. (2019, August 29). Remote towers guidance. Retrieved from https://www.ifatca.org/remote-towers-guidance/

7. European Union Aviation Safety Agency (EASA). (2020). EASA artificial intelligence roadmap 1.0. Retrieved from https://www.easa.europa.eu/en/document-library/general-publications/easa-artificial-intelligence-roadmap-10

8. Pellet, A. (2007). The definition of responsibility in international law: Some practical issues. Retrieved from https://prawo.uni.wroc.pl/sites/default/files/students-resources/PELLET%20The%20Definition%20of%20Responsibility%20in%20International%20Law.pdf

9. Merriam-Webster. (n.d.). Liability. In Merriam-Webster.com dictionary. https://www.merriam-webster.com/dictionary/liability

10. Kemp, R. (2018). Legal Aspects of Artificial Intelligence (v2.0). Mondaq. https://www.mondaq.com/uk/new-technology/739852/legal-aspects-of-artificial-intelligence-v20

11. UK Parliament. (2018). AI in the UK: Ready, willing and able? House of Lords Select Committee on Artificial Intelligence. Retrieved from https://publications.parliament.uk/pa/ld201719/ldselect/ldai/100/10007.htm

12. Bühler, C. F. (2020). Artificial Intelligence and Civil Liability. Journal of Intellectual Property, Information Technology and Electronic Commerce Law, 11(2), 141-155. https://doi.org/10.2139/ssrn.3645305

13. Eurocontrol. (2020). The FLY AI report 2020/9. https://publish.eurocontrol.int/sites/default/files/2020-09/FLYAI%20report%20%232.pdf