DISCLAIMER

The draft recommendations contained herein were preliminary drafts submitted for discussion purposes only and do not constitute final determinations. They have been subject to modification, substitution, or rejection and may not reflect the adopted positions of IFATCA. For the most current version of all official policies, including the identification of any documents that have been superseded or amended, please refer to the IFATCA Technical and Professional Manual (TPM).

51ST ANNUAL CONFERENCE, Kathmandu, Nepal, 12-16 March 2012

WP No. 87

Determining Operations Readiness of Automated ATM Systems

Presented by PLC and TOC

Summary

Modern ATM systems are increasingly complex. Safety-critical tasks such as Radar Data Processing or Flight Data Processing rely on complex software packages, while the increasing volume of air traffic makes the ATM system ever more dependent on such software. As a consequence, a software failure may lead to a catastrophic situation. Therefore, new ATM systems must be carefully designed, tested and validated before being considered ready for implementation. This paper discusses the concept of readiness, the development process, and the need for controller involvement in the development of automated ATM systems.

This paper proposes new Policy on this subject.

Introduction

1.1 Air Traffic Control systems have evolved to be more and more technology dependent. Procedural control gives way to radar control and manual flight data processing is replaced by computerized systems.

1.2 A further increase in the level of automation, and with it in the complexity of the ATM system, is expected in the future. SESAR acknowledges this, stating:

“to accommodate the expected traffic increase and complexity, an advanced level of automation support for the humans will be required”.

Similarly, the NEXTGEN implementation plan states:

“Air traffic controllers will become more effective guardians of safety through automation (…)”

1.3 Experience shows that the implementation of safety-critical software is a complex task. There is evidence showing that new systems have been implemented before being fully ready and problems occurred when issues with the software were subsequently discovered.

1.4 This paper studies the way complex systems are developed and validated until they are ready for use, analyzes some practical examples and proposes new Policy.

Discussion

2.1 Different approaches to developing complex systems – Development life cycles

2.1.1 Development life cycles are characterized by the way they organize the interactions between technical development processes. Three basic types of life cycle models may be distinguished:

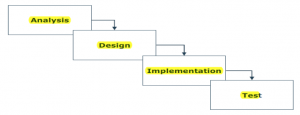

- Waterfall Model

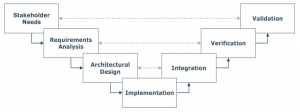

- V-Model

- Spiral Model

2.1.2 A waterfall model defines a sequential development approach. The number of steps may differ, but in general the sequence contains the following processes: analysis, design, implementation, and test. In some cases it is preceded by a process such as feasibility analysis or requirements capture, and in some cases it is followed by a process such as maintenance or deployment.

2.1.3 There are several weaknesses in this approach. First, because the waterfall model only considers integration and test issues at the end of the life cycle, there is a risk that problems will be discovered too late in the development. Secondly, the design has to be finalized before specific implementation problems are discovered. Coping with these problems late in the development is likely to incur delays and increase cost (e.g. redesign, requirements change). Therefore, the waterfall model can only be used for projects with limited risk in terms of development and final product acceptance, e.g. routine development of conventional solutions.

2.1.4 A V-model defines a development approach that connects design processes with verification processes. At the start of the project, the stakeholder needs are identified to ensure that the final product is valid for its intended environment and that the product will meet the stakeholder expectations. Early in the project it is verified that requirements are testable, and test requirements are written to prepare for verification. The product is designed taking integration aspects into account and is integrated in accordance with its design.

2.1.5 The V-model can be viewed as a modified waterfall model, because it contains the same basic processes and adds only the relationships between them. In this way it tries to discover problems early in the project, when it is less costly to solve them. A weakness of this model is that it does not provide guidance on how to deal with unforeseen properties of the product.

2.1.6 A spiral model defines an iterative development approach. Each iteration delivers a prototype of the product of increasing maturity by performing activities such as defining objectives, analysing risks, planning the development, and modelling the product. After the final prototype is ready, the real product is developed. This approach allows the customer or stakeholders to provide feedback early in the project.

2.2 Verification, validation and certification

2.2.1 In the development of a complex system, specifically software, it is important to be able to determine if it meets specifications and if its outputs are correct. This is the process of verification and validation (V & V) and its planning must start early in the development life cycle.

2.2.2 Software development has evolved from small tasks involving a few people to very large tasks involving many people. Because of this change, V & V has also changed. It became necessary to treat V & V as a separate activity in the overall software development life cycle. Today's V & V differs significantly from that of the past in that it is practiced over the entire software life cycle. It is also highly formalized, and some activities are performed by organizations independent of the software developer.

2.2.3 According to the definitions in the IEEE Standard Glossary of Software Engineering Terminology, verification simply demonstrates whether the output of a phase conforms to its input, as opposed to showing that the output is actually correct. Verification will not detect errors resulting from an incorrect input specification, and these errors may propagate undetected through later stages of the development cycle. It is therefore not enough to depend on verification alone: validation is the process of checking for problems with the specification and demonstrating that the system operates correctly. Certification is defined as a written guarantee that a system or component complies with its specified requirements and is acceptable for operational use. The certification process also starts at the beginning of the life cycle and requires cooperation between the developer and the regulatory agency.
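The difference between verification and validation can be illustrated with a minimal sketch. Everything in it is hypothetical and chosen only for illustration: the function `conflict_alert`, the specified threshold of 3 NM and the operational need of 5 NM are assumptions, not values taken from any real system or standard. The code conforms to its specification, so verification passes, but the specification itself is wrong for the assumed environment, so validation fails.

```python
# Hypothetical threshold written into the specification (assumed value).
SPECIFIED_MINIMUM_NM = 3.0
# Hypothetical separation actually needed in the operational environment.
OPERATIONAL_MINIMUM_NM = 5.0


def conflict_alert(distance_nm, minimum_nm=SPECIFIED_MINIMUM_NM):
    """Return True when two aircraft are closer than the minimum."""
    return distance_nm < minimum_nm


def verify():
    """Verification: does the implementation conform to its specification?"""
    return conflict_alert(2.9) is True and conflict_alert(3.0) is False


def validate():
    """Validation: does the system do what operations actually need?"""
    # A pair at 4 NM should raise an alert in the assumed environment.
    distance_nm = 4.0
    expected = distance_nm < OPERATIONAL_MINIMUM_NM
    return conflict_alert(distance_nm) == expected


if __name__ == "__main__":
    print("verification passed:", verify())
    print("validation passed:", validate())
```

In this sketch the defect lies in the specification rather than in the code, which is exactly the kind of error that verification alone cannot detect.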

2.2.4 An important aspect of certification is that it does not prove that the system is correct. Certification only proves that a system has met certain standards set by the certifying agency. The standards show that a product has met certain guidelines, but it does not mean that the system is correct. Any problem with the system is ultimately the responsibility of the designer and manufacturer, not the certification agency.

2.2.5 The safety case is an important document used to support certification. It contains a set of arguments supported by analytical and experimental evidence concerning the safety of a design. It is created early in the development cycle and is then expanded as safety issues arise. In the safety case, the regulatory authority will look to see that all potential hazards have been identified, and that appropriate steps have been taken to deal with them. The safety case must also demonstrate that appropriate development methods have been adopted and that they have been performed correctly. Items that should be in the safety case include, but are not limited to: specification of safety requirements, results of hazard and risk analysis, verification and validation strategy, and results of all verification and validation activities.

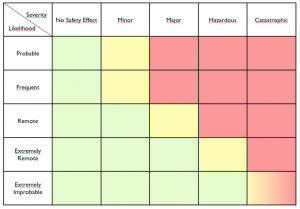

2.2.6 As a part of the safety case, systems used in CNS/ATM must pass a risk assessment in line with ICAO Doc4444. In Europe this need is explicitly requested in ESARR 4 while ESARR 6 requires that ANSPs define and implement a software safety assurance system to deal specifically with air traffic management network software related aspects. A risk assessment must quantify risks and evaluate their consequences as can be seen in the following Risk Assessment Matrix.

In the matrix, the likelihood of an event, defined in terms of probability of occurrence, is considered against its potential severity, which goes from an accident (Catastrophic) to a slight increase in controller workload.

- High Risk (Red): Tracking in a Hazard Tracking Risk Resolution System is required until the risk is reduced or accepted at the appropriate management level.

- Medium Risk (Yellow): Acceptable with review by the appropriate management level. Tracking in a Hazard Tracking Risk Resolution System is required.

- Low Risk (Green): Acceptable without review.

- A special case is considered when the Likelihood is Extremely Improbable and the Hazard Severity is Catastrophic: it is considered Medium Risk but unacceptable with single point and common cause failures.
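The classification rules above can be sketched in code. This is an illustration under stated assumptions, not a reproduction of the matrix in the figure: the intermediate category names, the numeric scale and the score thresholds (12 and 5) are hypothetical, since a real matrix assigns a colour to each cell individually. Only the three risk levels, the required actions and the special case come from the text.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    # Names other than EXTREMELY_IMPROBABLE are assumed for illustration.
    EXTREMELY_IMPROBABLE = 1
    EXTREMELY_REMOTE = 2
    REMOTE = 3
    PROBABLE = 4
    FREQUENT = 5


class Severity(IntEnum):
    # Only the two end points are described in the text; the rest are assumed.
    MINIMAL = 1       # slight increase in controller workload
    MINOR = 2
    MAJOR = 3
    HAZARDOUS = 4
    CATASTROPHIC = 5  # accident


ACTIONS = {
    "HIGH": "Track until the risk is reduced or accepted by management",
    "MEDIUM": "Acceptable with management review; tracking required",
    "LOW": "Acceptable without review",
}


def assess(likelihood, severity, single_point_or_common_cause=False):
    """Return (risk_level, action) for one hazard.

    The score thresholds below are hypothetical placeholders.
    """
    if (likelihood == Likelihood.EXTREMELY_IMPROBABLE
            and severity == Severity.CATASTROPHIC):
        # Special case from the text: Medium Risk, but unacceptable with
        # single point and common cause failures.
        if single_point_or_common_cause:
            return "MEDIUM", "Unacceptable: single point or common cause failure"
        return "MEDIUM", ACTIONS["MEDIUM"]

    score = likelihood * severity
    if score >= 12:
        level = "HIGH"
    elif score >= 5:
        level = "MEDIUM"
    else:
        level = "LOW"
    return level, ACTIONS[level]


if __name__ == "__main__":
    print(assess(Likelihood.REMOTE, Severity.MAJOR))
    print(assess(Likelihood.EXTREMELY_IMPROBABLE, Severity.CATASTROPHIC, True))
```

Handling the special case before the generic scoring keeps the exception explicit, so that changing the assumed thresholds cannot silently alter it.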

2.3 Human Factor aspects

2.3.1 Experience gained from the design of large power plants, chemical processes, aircraft, and other large and complex systems has shown the importance of integrating the system inhabitants, the humans, from the beginning and throughout.

2.3.2 Two concepts are of key importance in the assessment of any given system:

- Usability: Refers to whether a system is easy to use, easy to learn and efficient for the human to apply for performing a certain task.

- Acceptability: Refers to whether the humans coming into contact with the system accept its existence and the way in which it operates.

2.3.3 Integrating human factors in the system life cycle will maximize the acceptability of the system and considerably improve its usability.

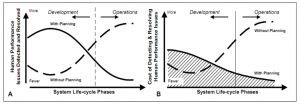

2.3.4 With good planning for human factors integration and a qualified application of philosophies, guidelines and techniques, most of the problems related to human performance will be detected in the early phases of system development. This situation, shown in Figure A, supports early detection of problems and consequently reduces human performance problems later in the system life cycle, such as during operation. If no attention is paid to these issues early in the development, as depicted by the dotted line in Figure A, the problems are merely postponed to the later stages of the system life cycle.

2.3.5 While it may appear costly to solve human performance problems early in the development, it is actually cheaper in the long run, as can be seen from Figure B. If a problem is left unresolved until the system has been brought into operation, it not only imposes a cost in terms of lower performance but is also much more expensive to fix. If problems are left until the system is in operation, the cost of fixing them will increase throughout the lifetime of the system.

2.4 Integrating human factors in the system life cycle

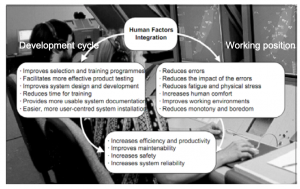

2.4.1 Integrating human factors in the system design will result in benefits as shown in the figure:

2.4.2 A well-known problem connected with the introduction of a new system (or even changes to an existing system) is that people in the workplace may feel threatened, alienated or otherwise uncomfortable with the change. It is therefore crucial to manage the change and to make sure that its introduction is successful. A number of key activities are related to such implementation:

- Provide help to face up to change:

It is important to help people to face up to the change and take them towards an acceptance of the need to change. Experience shows, however, that approximately 10% of the people in an organization will have serious doubts concerning the benefits of the change process and will resist it.

- Communicate as you have never communicated before:

Communication becomes a key activity in the change process. It is important to inform, to the maximum extent possible, in order to avoid uncertainty among employees. Rumours can be detrimental to the change process. It is equally important to receive feedback during the change process to obtain an accurate feeling of how things are being received and to improve implementation.

- Gaining energetic commitment to the change:

In the short-term, commitment can be gained from a focus on the survival of the organization. In the long-term the commitment has to come from system reward structures, employment policies and management practices.

- Early involvement:

Early involvement of the people affected by the change will go a long way towards reducing behavioural resistance during implementation. It will considerably reduce the amount of energy that has to be expended on coercion and power strategies to enforce compliance.

- Turn perception of ‘threat’ into opportunity:

A change process is likely to be seen as a threat by people. Experience shows that an organization which can change this perception, so that the change is seen as an opportunity, is more likely to succeed.

- Avoid over-organizing:

The change process should be well thought-out before it takes place, but it should be recognized that the process is very organic in nature. One should not stick to the plan just because it is there, but rather learn from the situations as they develop: a change process is a learning process.

- Train for the change:

People’s perception of the change may transform significantly if they have an opportunity to understand better what will be required of them. Training sessions can significantly reduce the resistance to the change.

2.4.3 To integrate human factors the development team should include people with the following profiles:

- system developers, typically software engineers;

- end-users, usually the operational controllers, supervisors and maintenance engineers;

- managers responsible for directing the development process;

- human factors specialists, who participate to ensure the integration of human factors.

2.4.4 It is important that a person responsible for human factors is nominated for the system development and participates in the planning and management throughout the life cycle of the system. If the human factors specialist does not have a background in ATM, it is important that he or she receives proper training and exposure to an operational environment.

2.4.5 David Hopkin describes the objective of the specialist as follows:

“An important function of the human factors specialist during system design is … to ensure that designers understand the relevance of human factors contributions enough to allow the human factors recommendations to be integrated into the design process.”

2.5 Training

2.5.1 One of the most important aspects of a new system implementation is training. According to IFATCA POLICY on training, this situation is covered by “CONTINUATION TRAINING REQUIREMENTS” (page 4 3 A15) of the Manual, where three different types of Continuation Training are determined:

- Refresher Training: organised on a regular basis and forming part of a competency scheme (towards rating validation);

- Additional Training: organised when required, likely in conjunction with refresher training;

- Development Training: This is not site specific and aims to prepare an ATCO for new and/or extra tasks not reflected in the licence.

2.6 IFATCA Policy

The following policies are relevant to the subject:

IFATCA Technical and professional manual, page 3.2.3.19

ATS 3.19 CONTROLLER TOOLS:

Controller Tools (CTs) are functions of an ATM system that enhance a controller’s ability to meet the objectives of ATS. They provide information that assists controllers in the planning and execution of their duties, rather than dictating a course of action.

IFATCA Technical and professional manual, page 4.1.2.7

WC.2.5. AUTOMATION / HUMAN FACTORS:

Automation must improve and enhance the data exchange for controllers. Automated systems must be fail-safe and provide accurate and incorruptible data. These systems must be built with an integrity factor to review and crosscheck the information being received. The Human Factors aspects of Automation must be fully considered when developing automated systems. Automation must assist and support ATCOs in the execution of their duties. The controller must remain the key element of the ATC system. Total workload should not be increased without proof that the combined automated/human systems can operate safely at the levels of workload predicted, and to be able to satisfactorily manage normal and abnormal occurrences. Automated tools or systems that support the control function must enable the controller to retain complete control of the control task in such a way so as to enable the controller to support timely interventions when situations occur that are outside the normal compass of the system design, or when abnormal situations occur which require non-compliance or variation to normal procedures. Automation should be designed to enhance controller job satisfaction. The legal aspects of a controller’s responsibilities must be clearly identified when working with automated systems. A Controller shall not be held liable for incidents that may occur due to the use of inaccurate data if he is unable to check the integrity of the information received. A Controller shall not be held liable for incidents in which a loss of separation occurs due to a resolution advisory issued by an automated system.

Guidelines and procedures shall be established in order to prevent incidents occurring from the use of false or misleading information provided to the controller.

IFATCA Technical and professional manual, page 4.1.2.8

WC.2.7. WORKING WITH UNSERVICEABLE OR INADEQUATE EQUIPMENT:

ATC equipment provided should include back-up secondary equipment on hot stand-by for use if the primary equipment becomes degraded. Controllers should be given initial and recurrent training in the degraded mode operations of their equipment. ATS management must ensure that ATS equipment is regularly maintained, by properly trained and qualified technical staff, to ensure its availability and reliability. ATS management must design adequate fault reporting procedures and publish required rectification times. Air Traffic Controllers should not use equipment that is known to be unserviceable, unreliable or inaccurate for the provision of services to air traffic. When designing and introducing new ATM-equipment the vulnerability and possible abuse of this equipment should be considered, and precautionary measures should be taken.

IFATCA Technical and professional manual, page 4.1.2.9

WC.2.8. REGULATORY APPROVAL OF ATM SYSTEMS EQUIPMENT:

MAs should encourage their State’s Regulatory Authority to play a role in the development and certification/commissioning and oversight during the life cycle of air traffic control equipment.

IFATCA Technical and professional manual, page 4.3.2.4

TRNG.2.5. AUTOMATION CONTROLLER TRAINING:

Controllers required to operate in an automated air traffic control system should receive relevant instruction in automatic data processing for ATC. Controllers should be involved in the specification, evaluation and implementation of an automated ATC system. Formal training should be established for all ATC personnel in the theoretical and practical procedures associated with the automated ATC system.

The above training should be carefully integrated with the implementation of each stage of the automated ATC system. Whatever the ATC environment, controllers should receive suitable, regular training on the published back-up procedures which would be put into operation in the event of a system failure. The implementation of automated systems shall include sufficient training, including The Human Factors aspects of automation, prior to using new equipment. The level of training is a major factor in determining the level of traffic that can be safely handled until all controllers have gained enough hands-on experience.

2.7 Examples of ATCOs co-developing the system – the cases of Slovenia and Switzerland

2.7.1 Recent implementation of new systems in several countries provides examples of the need for human factors integration.

2.7.2 In 2006 a major upgrade took place in Slovenia with the replacement of the FDPS. The new system, called KAMI, was introduced in 2008, but the development, as usual, did not finish with the implementation of the new system and improvements continued to be produced with the cooperation of controllers.

2.7.3 Slovenian ATCOs have been heavily involved in all phases of development of both RDPS and FDPS since the start of ATM provision in Slovenia in 1992. ATCOs not only test and implement new releases of software but also write the user requirements, so the system may be described as “designed by ATCOs”. During the development process, management voluntarily stays aside, confident that this cooperation between engineers, coders, project managers and end users is gradually increasing sector capacity at a reasonable cost.

2.7.4 In Switzerland, Geneva ACC switched to a strip-less system during 2005 and 2006. Instead of implementing the system in one go, the Swiss introduced this change in stages with the whole process taking seven steps over 18 months.

2.7.5 The cooperation between controllers and engineers to solve failures was excellent. Controllers were involved from the beginning with the specifications and development, while engineers were always present and available during the testing. Having the engineers in the simulator meant they could understand the way controllers used the system and witness the problems that arose, so controllers did not even need to write reports on errors and the risk of misunderstandings was fully avoided. Minor software changes were often available within 24 hours.

2.7.6 Once the system, or any of its stages, was considered mature enough, the validation process started with the participation of the Swiss regulator. Only after the Regulator had approved the system and the training of the ATC staff to work with it was the “go decision” taken, with the agreement of every stakeholder: the Regulator, ATC management, safety experts from the ANSP, the staff that had tested the software in the simulator and the training staff.

2.8 Examples of ATCOs Implementing already developed systems

2.8.1 VAFORIT

2.8.2 In the beginning of 2011 the VAFORIT system was introduced at Karlsruhe UAC. VAFORIT contains a Radar Processing System developed by Raytheon as well as a new Flight Data Processing System, called ITEC, by INDRA, which also built the HMI.

2.8.3 The VAFORIT project started in the mid-1990s and the system was scheduled to be operational in 2004. The project was soon delayed: by 2003 the expected completion date had moved to 2008. The project was not finally completed until 2011.

2.8.4 According to an engineer from INDRA, the key phases of the project were:

- System definition

- Development

- FAT: Factory Acceptance Testing

- SAT: Site Acceptance Testing

So the process, as a whole, can be seen as a waterfall model as described in 2.1.2.

2.8.5 SAT was scheduled to be the final process of development. But during that phase, in 2008, the system was rejected by the Air Traffic Controllers who had to learn VAFORIT in order to serve as instructors for the rest of the Karlsruhe controllers. Many hours of work had to be invested to partially redesign the system, to the frustration of developers who saw significant components of their work thrown away. So the development process, initially a waterfall model, was forced to change to a spiral model.

2.8.6 Previously only two former controllers had been involved in VAFORIT, but now a task force including 5 operational controllers, plus one flight data specialist, staff from the OPS section, software developers, etc., was working on VAFORIT. The task force’s first task was to write an Operational Concept that led to the so-called Operational Change Requests (OCRs). Development went on with the commitment of these controllers until the implementation of VAFORIT, and even now the task force is still working on developing the already implemented system.

2.8.7 One mistake was retrospectively acknowledged by the developers: they had initially designed the system without the participation of the users, but in the end the users’ opinion was needed. According to the engineers, a system needs a double validation: a technical one and a final user one. But the users’ advice and involvement are also necessary in the design and development phases to create a valuable product. Lack of user involvement can lead to a successful technical validation followed by rejection in the validation by the final users. VAFORIT, unlike the Swiss system, was a technological leap in one big step. In the engineer’s opinion, the users perceived the system as something foreign to them that dramatically changed the way they worked, and they rejected it.

2.8.8 The conclusions of the engineers after the validation experience concur perfectly with the better usability and acceptability predicted by the Human Factors Integration theory. Engineers said that operational personnel must participate from the very beginning, during the design process, because of the following benefits:

- The system complies with user requirements from the very beginning, avoiding the need for partial redesigns. (Usability)

- The final users are involved in the development so they feel like stakeholders and are better prepared for change. (Acceptability)

2.8.9 ERAM

2.8.10 ERAM (En Route Automation Modernization) is a project developed by Lockheed Martin for the FAA to replace the old system, HOST. Research to justify the need for such a program began in 1998; Lockheed Martin was awarded a sole-source contract by the FAA to develop ERAM in February 2001. ERAM should have been operational in the 20 American continental control centers by the end of 2010, but the program has suffered significant delays.

2.8.11 ERAM has already been tested in some facilities but it was not well received by controllers who perceived it as not ready for use. NATCA pointed out that ERAM was a good concept but not yet ready to be implemented.

2.8.12 An assessment from MITRE estimates that operational readiness will probably be delayed until August 2014, with an additional cost of $330 million. The FAA has already spent $1.8 billion on ERAM. The MITRE report confirms the operational problems:

- “operations were not sustained for more than a week at a time, eroding site confidence and trust”;

- “large number of problems being discovered in operational use”;

- “new releases often exhibited new problems on top of old problems that were not yet fixed”.

2.8.13 NATCA has committed to cooperate as much as possible in the development of ERAM. In a similar way to what had happened in Germany with VAFORIT, controllers have become more and more involved. This involvement is also in line with a recommendation from the MITRE report:

“involvement of ATC workforce as early as possible helps identify usability and operational issues”.

2.8.14 Interestingly, the FAA was aware of the existence of similar problems in the STARS system for terminal facilities and in the ATOP system for oceanic control. According to a report from the U.S. Department of Transportation:

“FAA stated that operational staff will continue to be involved in the validation of all security documents including the ERAM Security Risk Assessment Plan (…). This is important given that it is historically more expensive to add security features later than it is to add them during the system design phase.”

This recommendation is given for security but nothing is said about safety and control purposes.

2.9 Comparison

2.9.1 The examples above illustrate the Human Factors Integration case. The Americans and Germans developed their own systems without controller involvement, while in Switzerland and Slovenia controllers were present from the beginning of the projects, writing the user requirements of their own systems, co-developing them alongside engineers and programmers and finally testing and validating them. As predicted by theory, the lack of integration of Human Factors led to a long and expensive redesign of the system.

2.9.2 Interestingly, controllers and engineers who have cooperated in the development of a system always agree that they have learnt much about each other. A Spanish controller who worked alongside engineers said:

“at the beginning controllers and engineers were using the same words but not the same language. We needed some time until we began to understand each other needs and way of thinking”.

This controller, like others in a similar situation, concurs with the Human Factors Integration theory on the need to remain operational while cooperating in this kind of project:

“what you add to the project is your knowledge of the real needs of controllers working in the operations room. You need to remain operational to avoid losing the picture”.

2.9.3 Once the cooperation is established, the routine of work seems to be common: the controllers write the requirements, the engineers build the system and finally the controllers validate the system, their comments being the feedback that engineers need to work following the spiral model of development. The benefits of the integration of Human Factors are perfectly described by a member of the Slovenian development team:

“exact and precise definition phase with clear goal in mind saves a lot of work, nerves and money”.

Conclusions

3.1 Development of complex systems, such as ATM systems, is a complicated task. The best way to address this is through an iterative process, described as the spiral model, that integrates the view of every stakeholder.

3.2 Verification is the process to determine if a system or a part of a system delivers the expected outputs according to the inputs. Validation checks the specifications and the proper operation of the system. Certification is a written guarantee that a system or component complies with its specified requirements and has been deemed acceptable for operational use by the appropriate authority. A safety case is part of the certification process.

3.3 Human factors elements must be integrated during the development of the system. To achieve this, the development team should include system developers, operational controllers as end-users, managers and human factors specialists.

3.4 Controllers and developers think differently and speak different languages. Common ground shall be established in order to ensure that cooperation runs smoothly.

3.5 Early involvement of the end-users maximizes the acceptability of the system and improves its usability. There is a clear difference between the attitude of controllers involved in the development of a system from the beginning of the project and the attitude of their colleagues who have to deal with a nearly finished system. The former feel as responsible for the system as the code developers and, when interviewed, emphasize the good relationship between controllers and engineers, while the latter usually talk about the difficulties of dealing with a system developed without the final user in mind.

Recommendations

It is recommended that:

4.1 IFATCA Policy is:

In the design, development and implementation of new ATM systems, operational controllers should cooperate with software developers, system engineers and human factors specialists. Their role should include:

- To establish user requirements.

- To participate in the risk assessment process.

- To validate the system.

- To provide feedback in the further development of the system.

and is included in the IFATCA Technical and Professional Manual.

4.2 IFATCA Policy on page 4.3.2.4 of the IFATCA Technical and Professional Manual:

Controllers should be involved in the specification, evaluation and implementation of an automated ATC system.

Is deleted.

References

European ATM Master Plan, page 141.

FAA’s NEXTGEN implementation plan, page 20.

NLR National Aerospace Laboratory: Role of requirements in ATM operational concept validation 2007.

Verification/Validation/Certification 18-849b Dependable Embedded Systems Carnegie Mellon University.

ICAO Doc 4444 ATM/501. The need for a safety assessment is in section 2.6.1.

ESARR 4: Risk assessment and mitigation in ATM. See section 5.

ESARR 6: Software in ATM systems. See section 2.

FAA: NAS Modernization System Safety Management Program.

EUROCONTROL: Human Factors in the Development of Air Traffic Management Systems, 1998.

EUROCONTROL: The Human Factors Case: Guidance for Human Factors Integration, 2007.

V. David Hopkin: Human Factors in Air Traffic Control; Taylor & Francis Ltd, 1995.

Press release from DFS: https://www.dfs.de/dfs/internet_2008/module/presse/englisch/press_service/press_information/2011/new_ats_system_p1_vaforit_at_uac_karlsruhe_31_january/index.html

FAA Report Number AV-2005-066; June 29, 2005.

MITRE: Independent Assessment of the ERAM Program. The report was not initially intended for public release but is currently released and can be downloaded from: https://assets.fiercemarkets.com/public/sites/govit/mitreindependentassessment_eram.pdf