59TH ANNUAL CONFERENCE, Singapore, 30 March – 3 April 2020

WP No. 91

Big Data

Presented by TOC

IMPORTANT NOTE: The IFATCA Annual Conference 2020 in Singapore was cancelled. The present working paper was never discussed at Conference by the committee(s). Resolutions presented by this working paper (if any) were never voted on.

Summary

Collecting and analysing information for research purposes has always been a major part of human activity. New technologies and economic globalization have resulted in a continuous increase in the data available to be compared, processed and analysed to improve efficiency and knowledge in almost any environment. Big Data analytics is the outcome of this process: the use of large amounts of data to manage present scenarios and to predict future ones. The application of this methodology to ATM is quite recent and still confined to research and limited software implementations, but its potential is considerable, especially in the fields of safety, trajectory calculation engineering and the development of new tools for ATCOs.

Introduction

1.1 Big Data is one of the main research topics in many different areas. In the past, it was used mainly for business purposes, especially in the retail industry, to understand and anticipate customer demand and market trends. Today, Big Data is considered an essential resource in every economic and political field; collecting and analysing data has become a major task for all kinds of operators, who use it to study future scenarios for their activities and to implement the actions needed to meet forecast changes in their business environment.

1.2 Air Traffic Management is one of the newer fields in which Big Data has been identified as an essential tool for meeting future needs in the use of airspace, aviation infrastructure and new technologies, with important effects on the Air Traffic Control sector as well.

Discussion

2.1 What is Big Data?

2.1.1 Definition

“Big Data” is a field that deals with ways to analyse, systematically extract information from, or otherwise handle data sets that are too large or complex to be processed by traditional data-processing application software.

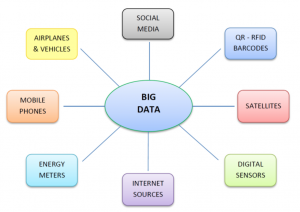

Big Data challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy and data source.

Current usage of the term ‘Big Data’ tends to refer to the use of predictive analytics, user behaviour analytics, or certain other advanced data analytics methods that extract value from data, and seldom to a particular size of data set.

2.1.2 The Genesis of Big Data

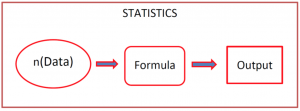

Collecting and analysing data has always been a human activity. Originally, this process was restricted to observing behaviours and events, recording them and then making a simple analysis depending on the needs of the final user. In modern times this activity became associated mainly with a then-new scientific discipline known as statistics.

Statistics is normally considered a branch of mathematics; its purpose is to collect data in order to analyse, interpret, explain and present it in the most correct and useful way for the purpose of the study.

Initially, statistics was applied to basic research, using mathematical models that could support only very limited uses of the data.

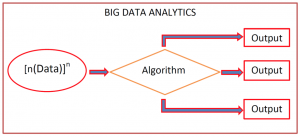

The shift from simple to more elaborate data analysis began in the contemporary era, when computer science and advances in mathematical and physical applications gave new impetus to the subject.

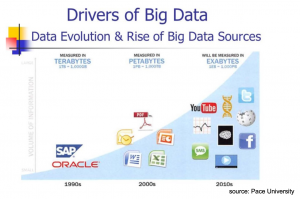

The continuous development of new software able to collect, manage and process enormous quantities of data led to the creation of ‘Big Data science’, in which the volume of information processed grew from a few dozen terabytes (10^12 bytes) to many zettabytes (10^21 bytes).

2.1.3 Big Data is Power

Collecting a large amount of data that can be analysed and processed as required is an important asset: it can bring many advantages and make an enormous difference among competitors. One of the first examples of data collection was the introduction of loyalty (fidelity) cards in the retail market. This method allowed companies to learn specific personal details and preferences of their customers, which helped them define products and distribution structures able to meet customers’ needs and expectations. This important research tool soon became a business in its own right: many service companies entered the market, offering market studies based on the collected data and giving businesses solutions better matched to market demand. These companies have become more and more important and nowadays represent a large part of the service sector. Forbes calculated that the Big Data industry today is worth more than $200 billion, with most of these companies listed on the stock exchange. The ability to collect data and process it in order to predict future changes in any economic environment explains why this activity is such a precious resource. It is a matter of power, which lies in owning, processing and using data in order to predict future product offers, distribution systems, company structures and strategies, resource needs and workforce optimization.

In the aviation industry, for example, airline associations collect passenger data to adapt the commercial offer to new trends and requests. Airlines can define their future strategies, such as new services and flight connections, according to customer trends.

In the ATM field, Air Navigation Service Providers (ANSPs) collect data from air traffic to define a more efficient network, implement new technologies, accommodate new demands for services, redesign airspace, develop procedures and increase productivity through better use of infrastructure and human resources.

From this perspective, Big Data is power, both financial and commercial: it can help companies succeed in the market, reduce costs, increase profits and become more sustainable and efficient.

2.2 Data analysis

2.2.1 Collection of Data

Collecting data is the first step of the process. Data can be obtained from different sources. The end user can obtain information directly using different methods or extract it from the systems used to deliver services. Alternatively, data can be obtained or purchased from other companies, associations or institutions.

The second step is the storage of data, which may seem a simple task but is not. The amount of information to be stored is very large, which requires servers or cloud space big enough for this activity. In addition, the storage system must be secure and protected against infiltration or manipulation by unauthorized parties.

2.2.2 Data Analysis Methods

Nowadays, there are several technologies developed to process large amounts of data and to analyse it according to different needs.

Business intelligence and cloud computing are the most common technologies for Big Data analysis. They are often combined with mathematical techniques in order to increase computing capacity and the quality of the results.

2.2.3 Big Data and Artificial Intelligence

High-performance data-processing software has reached a level at which most analysis methods can deliver satisfactory results. The limitation of this approach lies in the analysis of elements that must be continuously compared so that they can be updated, evaluated and used effectively. Continuous processing and updating can take a long time, especially with Big Data, and if not done correctly it can lead to incomplete and unsatisfactory results. For this reason, combining traditional computing methods with Artificial Intelligence (A.I.) is considered a valid way to improve Big Data analysis.

A.I. offers a fast and simple way to update information autonomously and to make scenarios as realistic as possible, allowing coming scenarios to be predicted and defined with the highest possible precision. This is possible because A.I. uses a learning approach: it adjusts its output according to the characteristics it has learned from historical data on the same information, and potential environmental changes can be included in the algorithm. The output data used for the final analysis is therefore more accurate and relevant for any kind of processing, allowing results to be interpreted, explained and presented in a way that is closer to present and future reality.

Note: Further information on A.I. can be found in WP92-2020.

2.3 Big Data in ATM

2.3.1 Availability of Data

The application of Big Data analytics to ATM is very new. The first results of this science applied to aviation show great potential for future implementation and for further study of methods in this field. Collecting aviation data is considered quite easy, because all activities are already computer-managed and all information is stored for post-analysis and investigation. The relevant data come from airlines, airport operators, air navigation service providers, regulators and aircraft. The analysis focuses mainly on inputs from flight plans, airport capacity and configuration, surveillance data, quick access recorder (QAR) data, aircraft maintenance data and safety reports. Other information, such as surveys, transactions, social media and travel industry reports, can also be included in the research model.

2.3.2 Sharing of Data and Security

Constantly monitoring and updating the ATM environment with data analysis methods may require acquiring information from different sources and operators. This kind of activity is already partially in place to enable the management of air traffic both on the ground and in the air.

The implementation of new analysis applications will require more data than is currently shared by the different actors in the ATM system. It will therefore be necessary to create new, easily accessible data storage systems into which all operators can input the information held in their own systems, with all the security and safety conditions needed to prevent improper use of the data and access by unauthorized parties.

Moreover, the use of such information should be consistent with the data protection principles contained in ICAO Annexes 13 and 19. The data must not be processed for punitive purposes, so that ATCOs are adequately protected from inappropriate and unfair actions.

As the knowledge obtained by analysing this data is very powerful, other entities have a strong interest in gathering it, for both legal and illegal purposes. Data storage systems must therefore be protected at all levels, to assure operators that data will be used only for predetermined purposes, and data should be encrypted to the highest possible degree (ICAO Annex 19, Safety Management).

2.3.3 Identification of Useful Data in ATM

The creation of a data storage system is an interesting and fundamental aspect of Big Data analytics. The huge amount of information provided by the different stakeholders will be very heterogeneous. For this reason, the data needed for the analysis model will have to be identified according to the purposes of the research.

For ATM purposes, for example, the required information will mainly include:

- Radar tracking data

- Flight plans data

- Aircraft cockpit data

- Airlines’ flight monitoring and maintenance data

- Airport ground movement and handling data

- Safety reports

- Any other information required by the specific needs of the study

It is important to avoid including too much data in the study and to limit the analysis to the essentials; otherwise, the unnecessary workload could create problems in data processing and in the correct elaboration of the results.

Moreover, the data used must be compatible and homogeneous, to avoid comparing and processing information collected under different conditions or in different unit systems; doing so would produce invalid output and erroneous conclusions.
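As a minimal illustration of this homogenisation step, the Python sketch below converts raw records from different sources to a single unit system (feet and knots) and keeps only the fields needed for the study. The field names (`alt`, `alt_unit`, `gs`, `gs_unit`) and the `TrackPoint` structure are hypothetical assumptions made for the example, not part of any ATM data standard.

```python
from dataclasses import dataclass

# Exact conversion factors: 1 ft = 0.3048 m and 1 kt = 1.852 km/h.
FT_PER_M = 1 / 0.3048
KT_PER_KMH = 1 / 1.852


@dataclass(frozen=True)
class TrackPoint:
    """Hypothetical record holding only the fields kept for the analysis."""
    callsign: str
    altitude_ft: int
    ground_speed_kt: int


def normalize(record: dict) -> TrackPoint:
    """Convert one raw record (hypothetical field names) to feet and knots.

    Any field not listed in TrackPoint is dropped, and unknown units are
    rejected rather than silently mixed with the rest of the data set.
    """
    alt, alt_unit = record["alt"], record["alt_unit"]
    if alt_unit == "m":
        alt *= FT_PER_M
    elif alt_unit != "ft":
        raise ValueError(f"unknown altitude unit: {alt_unit!r}")

    gs, gs_unit = record["gs"], record["gs_unit"]
    if gs_unit == "km/h":
        gs *= KT_PER_KMH
    elif gs_unit != "kt":
        raise ValueError(f"unknown speed unit: {gs_unit!r}")

    # Rounding to whole feet and knots is an arbitrary choice for this sketch.
    return TrackPoint(record["callsign"], round(alt), round(gs))


# The same flight as reported by two hypothetical sources in different units.
metric = {"callsign": "ABC123", "alt": 3048, "alt_unit": "m",
          "gs": 850, "gs_unit": "km/h", "squawk": "1234"}
imperial = {"callsign": "ABC123", "alt": 10000, "alt_unit": "ft",
            "gs": 459, "gs_unit": "kt"}
print(normalize(metric) == normalize(imperial))  # True
```

Rejecting unknown units with an error, instead of guessing, keeps incompatible records out of the analysis rather than letting them distort the results.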

2.4 Examples of the Usage of Big Data

2.4.1 Integrated systems: SWIM and A.I.

System Wide Information Management (SWIM) is a global Air Traffic Management (ATM) industry initiative to harmonize the exchange of aeronautical, weather and flight information for all airspace users and stakeholders. Eurocontrol presented the SWIM System concept to the Federal Aviation Administration (FAA) in 1997 and in 2005. The ICAO Global ATM Operational Concept adopted the SWIM concept to promote information-based ATM integration. SWIM is now part of development projects in the United States (Next Generation Air Transportation System, or NextGen), the Middle East (GCAA SWIM Gateway) and the European Union (Single European Sky ATM Research, or SESAR).

The SWIM-program is an advanced technology program designed to facilitate greater sharing of ATM system information, such as airport operational status, weather information, flight data, status of special use airspace, and restrictions.

SWIM can be implemented using custom-made or commercial off-the-shelf hardware and software to support a Service Oriented Architecture (SOA) that can facilitate the addition of new systems and data exchanges and increase common situational awareness.

The integration of SWIM with Artificial Intelligence systems could improve the manageability of data, enable continuous comparison with historical data, and increase the predictability of real trajectories and of deviations from standard procedures or expected performance.

Note: For more information on SWIM, see IFATCA 2018 WP85 – B.5.4 – SWIM Technical and Legal Issues.

2.4.2 SafeClouds

SafeClouds is the largest aviation safety Big Data analytics project under the Horizon 2020 research and innovation programme and is managed by INNAXIS, a Spanish research institute. It is run by a consortium of airlines, ANSPs, aviation authorities and research institutions. Data protection is one of the most important aspects of the project and is achieved through cryptographic methods that guarantee both accessibility and security. The main safety topics addressed are airprox, controlled flight into terrain, unstable approaches and runway safety. Analysing a large quantity of data can help draw a clear picture of safety problems and of the factors contributing to such events, creating a useful supporting tool for ATCOs, pilots and systems that can increase safety at all levels.

2.4.3 Automatic Safety Monitoring Tool (ASMT)

ASMT is a new safety tool developed by Eurocontrol to help collect and analyse safety data; the information is obtained from ATM systems and includes radar tracks, flight plans and system alerts. In particular, the processed parameters concern infringements of separation minima, airspace penetrations, altitude deviations, rate of closure, and safety warnings such as short term conflict alert (STCA) and airborne collision avoidance system (ACAS) alerts.
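The kind of check an automatic monitoring tool performs on stored radar tracks can be sketched as follows. This is not ASMT's actual algorithm: the minima (5 NM lateral, 1000 ft vertical), the flat local grid in nautical miles and the `Plot` structure are illustrative assumptions. A pair of plots counts as an infringement only when both the lateral and the vertical minimum are lost, and the rate of closure is derived from the change in horizontal distance between consecutive radar updates.

```python
import math
from dataclasses import dataclass
from typing import Iterator, Optional

# Illustrative minima only; real values depend on airspace and procedures.
LATERAL_MIN_NM = 5.0
VERTICAL_MIN_FT = 1000.0


@dataclass(frozen=True)
class Plot:
    """One radar plot in a hypothetical flat local grid (NM) with altitude (ft)."""
    x_nm: float
    y_nm: float
    alt_ft: float


def horizontal_nm(a: Plot, b: Plot) -> float:
    return math.hypot(a.x_nm - b.x_nm, a.y_nm - b.y_nm)


def infringed(a: Plot, b: Plot) -> bool:
    """Separation is lost only if BOTH lateral and vertical minima are infringed."""
    return (horizontal_nm(a, b) < LATERAL_MIN_NM
            and abs(a.alt_ft - b.alt_ft) < VERTICAL_MIN_FT)


def scan(track_a: list[Plot], track_b: list[Plot],
         update_s: float) -> Iterator[tuple[int, Optional[float]]]:
    """Yield (update index, closure rate in kt) for every infringing update.

    Both tracks are assumed to be sampled at the same instants, update_s
    seconds apart. The closure rate is positive when the aircraft converge
    and is None for the first update, where no previous plot exists.
    """
    for i, (a, b) in enumerate(zip(track_a, track_b)):
        if not infringed(a, b):
            continue
        rate = None
        if i > 0:
            previous = horizontal_nm(track_a[i - 1], track_b[i - 1])
            rate = (previous - horizontal_nm(a, b)) / update_s * 3600.0
        yield i, rate


# Two hypothetical aircraft converging head-on, 500 ft apart, 4 s radar updates.
a = [Plot(0.0, 0.0, 35000), Plot(0.5, 0.0, 35000)]
b = [Plot(5.5, 0.0, 35500), Plot(5.0, 0.0, 35500)]
print(list(scan(a, b, 4.0)))  # [(1, 900.0)]
```

Output of this kind is meant to be aggregated to describe operational reality, in line with the just culture concept, rather than to single out individual events.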

The large amount of data collected in this way is stored for post-analysis by safety experts. The data must be managed with the aim of helping to interpret the operational reality, not to investigate single events or to become a study method in conflict with the just culture concept.

This tool should contribute to an enhanced trajectory-based network with more flexible use of airspace in a safer environment.

Note: For more information on ASMT, see IFATCA 2018 WP307 – C.6.8 – A review of the Policy on the ATM Safety Monitoring Tool.

2.4.4 Eurocontrol ATC2ATM Programme

The continuous increase in air traffic and the need to accommodate a higher level of air traffic control demand require the creation of new tools that can help to maintain safety while improving controller and sector productivity.

Maastricht Upper Area Control Centre (MUAC) is working towards this goal with the Sector Opening Table Architect, a post-operational analysis and business intelligence tool. It is part of a wider programme called ATC2ATM, which aims to achieve greater efficiency through direct collaboration between capacity management and ATC functions.

In addition to the Sector Opening Table Architect, MUAC is using new traffic prediction improvement (TPI) software, which is also part of the ATC2ATM programme. TPI compares historical flight data to determine the route an aircraft is most likely to fly; the system learns from two years of historical trajectories, with additional inputs such as time, day of the week, status of military areas and other airspace restrictions, and weather. Tests conducted by Eurocontrol showed that TPI reduces the error between the predicted and the actual trajectory by half on average. The next step will be to include vertical prediction, to determine how fast a flight will climb or descend. TPI should also give more precise information about airspace occupancy once the flight is live, allowing the network management position to plan sector configurations in advance and to use resources more efficiently.
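The idea of learning the most likely route from historical trajectories can be illustrated with a deliberately simple frequency count. This is not how TPI works internally (its algorithm is not described here); the `HistoricalFlight` fields, the route labels and the fallback rule are hypothetical assumptions. The sketch picks the route most often flown by past flights on the same city pair under the same conditions (day of the week, military area status), and falls back to all flights on the city pair when no past flight matches those conditions.

```python
from collections import Counter
from typing import NamedTuple, Optional


class HistoricalFlight(NamedTuple):
    """One past flight (hypothetical fields)."""
    origin: str
    destination: str
    weekday: int           # 0 = Monday ... 6 = Sunday
    military_active: bool  # was the relevant military area active?
    route: str             # label of the route actually flown


def most_likely_route(history: list[HistoricalFlight], origin: str,
                      destination: str, weekday: int,
                      military_active: bool) -> Optional[str]:
    """Return the route flown most often by comparable past flights."""
    same_pair = [f for f in history
                 if (f.origin, f.destination) == (origin, destination)]
    same_conditions = [f for f in same_pair
                       if f.weekday == weekday
                       and f.military_active == military_active]
    # Prefer flights flown under the same conditions; otherwise use the pair.
    pool = same_conditions or same_pair
    if not pool:
        return None
    return Counter(f.route for f in pool).most_common(1)[0][0]


history = (
    [HistoricalFlight("EHAM", "LIRF", 0, True, "ROUTE_A")] * 3
    + [HistoricalFlight("EHAM", "LIRF", 0, False, "ROUTE_B")] * 2
    + [HistoricalFlight("EHAM", "LIRF", 0, False, "ROUTE_A")]
)
print(most_likely_route(history, "EHAM", "LIRF", 0, False))  # ROUTE_B
```

A production system would weigh many more inputs (time of day, weather, other restrictions) and predict a full four-dimensional trajectory rather than a route label; the count above only shows why conditioning on context changes the prediction.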

The use of this methodology, and of the associated tools or systems, could change the workload pattern, with sectors/positions opened or closed in such a way that there are no longer any low-traffic periods.

In addition, shift patterns could be introduced with short-notice changes to opening and closing times, possibly reducing controllers’ ability to plan their social and family commitments and to lead a regular life.

In this case, a more thorough study of shift planning and sector/position organization should also be carried out, in order to protect ATCOs’ health and professional capacity.
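The concern about losing low-traffic periods can be made concrete with simple arithmetic. In the sketch below, the number of sectors opened in each period is the smallest number whose combined capacity covers the forecast demand, and utilization is demand divided by the capacity actually open. The capacity figure, the hourly periods and the 50% "low-traffic" threshold are hypothetical values chosen for illustration, not operational criteria.

```python
import math


def sectors_needed(demand: list[int], capacity_per_sector: int) -> list[int]:
    """Smallest number of sectors (at least one) whose capacity covers demand."""
    return [max(1, math.ceil(d / capacity_per_sector)) for d in demand]


def utilization(demand: list[int], capacity_per_sector: int) -> list[float]:
    """Share of the open capacity actually used in each period."""
    sectors = sectors_needed(demand, capacity_per_sector)
    return [d / (n * capacity_per_sector) for d, n in zip(demand, sectors)]


def has_low_traffic_period(demand: list[int], capacity_per_sector: int,
                           threshold: float = 0.5) -> bool:
    """True if at least one period's utilization is below the threshold."""
    return any(u < threshold for u in utilization(demand, capacity_per_sector))


# Hypothetical hourly demand and sector capacity, both in flights per hour.
busy_day = [40, 75, 90, 38]
print(sectors_needed(busy_day, 40))          # [1, 2, 3, 1]
print(utilization(busy_day, 40))             # [1.0, 0.9375, 0.75, 0.95]
print(has_low_traffic_period(busy_day, 40))  # False
```

When the sector configuration follows demand this closely, every period runs at 75% utilization or more, which is exactly the situation in which recovery time has to be planned explicitly rather than assumed.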

2.5. Big Data and Air Traffic Control

2.5.1 Data availability for Air Traffic Control Officers

ATCOs use a great deal of information on a regular basis, most of it displayed directly on the screen or obtainable through specific control buttons. The data they observe is real-time, the only exception being some historical information related to a specific live flight. Big Data analytics, by contrast, can provide precise short-term predictions of traffic movements, mainly on the ground but also en route.

For ground/tower controllers, turnaround and taxi times could become more predictable, with very small deviations from reality, especially under particular conditions, provided the model includes factors such as works on taxiways and aprons, de-icing, the number of queuing aircraft, single runway operations, different aircraft categories, weather, departure slot times, safety events and inspections.
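A minimal sketch of how historical records could support a taxi-time estimate: group past departures by a few of the conditions listed above and take the median taxi time of the matching group, falling back to the median of all records when no past departure matches. The `TaxiRecord` fields and the queue-length bands are hypothetical; the median is used here only because it is less sensitive than the mean to a few exceptional delays.

```python
from statistics import median
from typing import NamedTuple


class TaxiRecord(NamedTuple):
    """One past departure (hypothetical fields)."""
    deicing: bool
    queue_length: int     # aircraft queuing for the runway at the time
    single_runway: bool
    taxi_minutes: float


def queue_band(n: int) -> str:
    """Group queue lengths into coarse, hypothetical bands."""
    if n <= 2:
        return "0-2"
    if n <= 5:
        return "3-5"
    return "6+"


def predict_taxi_minutes(history: list[TaxiRecord], deicing: bool,
                         queue_length: int, single_runway: bool) -> float:
    """Median taxi time of past departures under the same conditions.

    Falls back to the median of all records; raises StatisticsError if the
    history is empty.
    """
    key = (deicing, queue_band(queue_length), single_runway)
    matching = [r.taxi_minutes for r in history
                if (r.deicing, queue_band(r.queue_length), r.single_runway) == key]
    return median(matching or [r.taxi_minutes for r in history])


history = [
    TaxiRecord(False, 1, False, 8), TaxiRecord(False, 2, False, 10),
    TaxiRecord(True, 4, True, 22), TaxiRecord(True, 5, True, 26),
    TaxiRecord(True, 3, True, 24),
]
print(predict_taxi_minutes(history, True, 4, True))  # 24
```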

For en-route traffic, displaying the likely new trajectories of arrivals and departures could be an option. These trajectories would take into account all the elements that can influence them, such as deviations from the flight plan, airspace restrictions and active military areas, special air traffic that may interfere with the climb/descent, weather conditions and airport sequencing. Information about expected performance, such as horizontal and vertical speed and top of descent, could also be predicted. All these predictions could be made available through the analysis of historical data for each specific flight or aircraft, processed with all the features included in the model and extracted and interpreted according to present needs.
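As a worked example of predicting top of descent from historical performance, the sketch below averages the descent gradient (feet lost per nautical mile) observed in past descents of the same flight or aircraft type and divides the altitude to be lost by it. The gradient follows from vertical speed and ground speed: at `gs` knots an aircraft covers `gs / 60` NM per minute, so the gradient is `vs / (gs / 60)` ft/NM. Assuming a constant gradient and ignoring wind changes and speed constraints is a simplification made only for this illustration, and the input history is hypothetical.

```python
from statistics import mean


def descent_gradient_ft_per_nm(vertical_speed_fpm: float,
                               ground_speed_kt: float) -> float:
    """Feet lost per nautical mile flown (ground speed / 60 = NM per minute)."""
    return vertical_speed_fpm / (ground_speed_kt / 60.0)


def predicted_tod_distance_nm(history: list[tuple[float, float]],
                              cruise_alt_ft: float,
                              target_alt_ft: float) -> float:
    """Distance before the target point at which descent should start.

    history holds (vertical speed in fpm, ground speed in kt) pairs observed
    in past descents; the average gradient is assumed to hold for the whole
    descent.
    """
    gradient = mean(descent_gradient_ft_per_nm(vs, gs) for vs, gs in history)
    return (cruise_alt_ft - target_alt_ft) / gradient


# Two hypothetical past descents, both at about 300 ft/NM.
past = [(2000.0, 400.0), (1800.0, 360.0)]
print(round(predicted_tod_distance_nm(past, 35000.0, 5000.0), 1))  # 100.0
```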

2.5.2 Presentation and Customization of Data for ATCOs

One of the most important technical aspects for ATCOs is the presentation of data on their working positions. Displaying too much information can cause confusion and increase workload, especially during peak times. Providing tools and data is obviously necessary and important, but it must be limited to what the task requires. It is known that a massive amount of information presented at the same time can be difficult for the human mind to understand and process, leading to work overload with effects similar to working with wrong or missing information needed to carry out a given task.

It is important that new data is presented correctly, only when necessary and without diverting the operators’ attention; the human-machine interface must be user-friendly and strictly adapted to real working needs. Additional data available for consultation should be an option at the ATCOs’ disposal, activated only through dedicated command functions. Automatically presented information, on the other hand, must be agreed with and accepted by the operators so that they can work efficiently and safely according to their working position, role and needs. It is also known that each ATCO works differently and, even when covering the same position, controllers like to customize their layout by adding or hiding some of the information provided by the system. This has a direct connection to ATCOs’ responsibilities and to their use of the data presented by the ATC system.

Conclusions

3.1 Big Data analytics is a modern method of treating, processing, interpreting and presenting a large amount of information according to the final purposes of the study.

3.2 The use of this scientific approach is new to ATM, but its potential is very interesting and promising.

3.3 The most promising application of Big Data is probably in the management of air traffic at business and strategic level. In real-time operations it can also help the industry create new tools for ATCOs, for better traffic prediction, tactical solutions and use of airspace and airport facilities. Comparing historical information with live trajectories can increase safety and efficiency.

3.4 When using business analytics to optimize sector and position configurations to match demand, due attention should be given to controller workload in order to provide recovery time between periods of high traffic load.

3.5 Controller tools should meet controllers’ needs, avoid displaying too much information and allow the working position to be customized according to individual needs.

Recommendations

4.1 It is recommended that this paper is accepted as information material.

References

N.K. Ure et al., Data Analytics in Aviation, ITUARC.

S. Pozzi et al., Safety Monitoring in the Age of Big Data, ATM Research and Development Seminar (ATM2011).

H. Naessens, How Big Data is helping meet Europe’s capacity challenge, Skyway n.68, 2018.

CANSO, Knowledge is power, Airspace n.42, 2018.

Eurocontrol, Big Data to improve capacity planning and ATM efficiency, press release 2018.

Eurocontrol, Safeclouds uses Big Data analytics to drive aviation safety, press release 2018.

D. Pérez Pérez, Big Data ATM, CRIDA 2018.

IFATCA, Technical and Professional Manual, ver. 64.0, July 2019.

Wikipedia, Big Data, Definition, online encyclopedia.

ICAO, Annex 13, 11th edition, July 2016.

ICAO, Annex 19, 2nd edition, July 2016.

Wikipedia.