56TH ANNUAL CONFERENCE, Toronto, Canada, 15-19 May 2017
WP No. 87
Cybersecurity
Presented by TOC

Summary

The growing use of automation and networking means that cybersecurity must be considered. Cybersecurity has arrived late in aviation, but it can no longer be ignored. Security depends not only on programming but also on users being aware of the need to protect information and staying alert to vulnerabilities and social engineering.

Introduction

1.1 Our world relies increasingly on technology. Large amounts of data are digitized to be processed by computer systems, stored as digital files, and sent and received through communication networks. Basically, the security problems faced in the digital world are the same as those faced in the physical world: ensuring that only authorized people can access or modify sensitive data, and denying access to unauthorized people. When such security issues arise in the digital world, the term cybersecurity is used.

1.2 The aviation sector has only recently begun devoting significant attention to cybersecurity, but other industries have dealt with it for a long time. The challenges they faced, the solutions they found and even the mistakes they made may be useful when implementing cybersecurity systems in aviation.

1.3 This paper identifies some typical vulnerabilities in automated systems, gives examples of how they have been exploited and considers how they could be used against the aviation sector. Finally, the efforts to implement solutions in aviation are discussed.

Discussion

Vulnerabilities in digital systems

2.1 The purposes of cybersecurity are no different from those of conventional security. In both cases the threats range from unauthorized access to sensitive information to an attacker taking control of a critical system or denying access to legitimate users. Cyber-threats, however, have some peculiarities that must be considered (Bruce Schneier. Secrets & Lies, Digital Security in a Networked World. Wiley Publishing. 2000).

- Automation: Computers excel at performing dull, repetitive tasks. A human attacker would give up if a password-protected lock resisted a thousand attempts to guess the key word, but a computer will keep trying until it completes the task, whether that takes hours, days or even years.

- Distance: Communication networks have made it possible for a cyber attacker to be thousands of kilometers away from the target, which makes tracing and locating the attacker very complicated.

- Propagation: Once a vulnerability is discovered and a tool to exploit it is created, the tool may be disseminated via the internet, multiplying the number of attackers who can use it, even those who lack the skills to understand how the attack application works.

2.2 Intrusion into a system to steal data is one of the most common examples of the risks associated with computer systems. Some intrusions have been widely publicized because of the prominence of the firms attacked and the number of customers affected: the Sony PlayStation Network was hacked in 2011 by attackers who gained access to the data of 77 million users (https://www.theguardian.com/technology/2011/apr/26/playstation-network-hackers-data), while passwords and other data from 500 million Yahoo! accounts were stolen in 2014 (https://www.cnet.com/news/yahoo-500-million-accounts-hacked-data-breach/).

2.3 Even systems that are well designed in theory may be vulnerable because of mistakes in implementation. An example is the drinking water control and distribution system of Oslo, Norway. It was supposed to be protected against intrusion, but in September 2009 it was found that it could be accessed via Bluetooth because nobody had thought of changing the router's default settings and password (https://diarioti.com/contrasena-para-sistema-online-de-suministro-de-agua-potable-%C2%940-0-00%C2%94/30266).

2.4 Even if properly implemented, a password-protected system can be attacked by brute force, trying one password after another until the correct one is eventually found. This may require a long time and powerful computers, but the task becomes much simpler if a weakness is found in the algorithms, or if the password is weak enough to fall to a dictionary attack, which tries the most probable passwords first.
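A little arithmetic makes the difference between brute force and a dictionary attack concrete. The Python sketch below assumes a hypothetical attacker who can test one billion guesses per second. For simplicity, it also assumes that passwords are stored as unsalted SHA-256 hashes. Both are illustrative assumptions and do not describe any particular system.

```python
import hashlib
import string
from typing import Iterable, Optional

def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passwords of exactly `length` characters."""
    return alphabet_size ** length

def seconds_to_exhaust(candidates: int, guesses_per_second: float) -> float:
    """Worst-case time for a brute-force search to try every candidate."""
    return candidates / guesses_per_second

def dictionary_attack(target_hash: str, wordlist: Iterable[str]) -> Optional[str]:
    """Try candidates in order (most probable first) against an unsalted SHA-256 hash."""
    for word in wordlist:
        if hashlib.sha256(word.encode()).hexdigest() == target_hash:
            return word
    return None

RATE = 1e9  # hypothetical attacker speed: one billion guesses per second
SECONDS_PER_YEAR = 365 * 24 * 3600

weak = keyspace(26, 8)  # eight lowercase letters
strong = keyspace(len(string.ascii_letters + string.digits + string.punctuation), 12)
print(f"8 lowercase letters: {seconds_to_exhaust(weak, RATE) / 60:.1f} minutes")
print(f"12 mixed characters: {seconds_to_exhaust(strong, RATE) / SECONDS_PER_YEAR:.1e} years")

# A weak password falls to a very short list of common choices.
stored_hash = hashlib.sha256(b"password").hexdigest()
print(dictionary_attack(stored_hash, ["123456", "12345", "qwerty", "password"]))
```

The search space grows exponentially with length and alphabet size. That is why the weakest passwords are found by trying likely candidates first rather than by exhaustive search.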

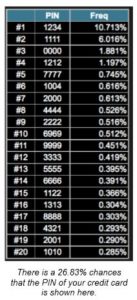

Unfortunately, strong passwords are difficult to remember, so users often choose weaker but easier ones. The public exposure of passwords after attacks like those mentioned in 2.2 has given experts real data to study. They have found that some of the most commonly used passwords are as easy to guess as 12345 or… the word password. A study by the firm DataGenetics of 3.4 million four-digit passwords (the format used for credit card PINs) showed that 1234 is the most common one (10.7% of the passwords), followed by 1111 (6%) and 0000 (1.9%) (https://www.datagenetics.com/blog/september32012/). A person who finds a lost credit card would therefore have more than an 18% chance of getting money from an ATM just by trying these three numbers.
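The 18% figure is the sum of the three shares, because a thief who knows the statistics simply tries the most common PINs first. The minimal Python sketch below compares this strategy with blind guessing. It assumes the card is blocked after three wrong attempts, which is a common rule but not a universal one.

```python
# Shares of four-digit PINs reported in the DataGenetics study cited in 2.4.
PIN_SHARE = {"1234": 0.107, "1111": 0.060, "0000": 0.019}
TOTAL_PINS = 10_000  # every combination from 0000 to 9999

def dictionary_success(shares: dict, attempts: int) -> float:
    """Probability of success when the most common PINs are tried first."""
    return sum(sorted(shares.values(), reverse=True)[:attempts])

def uniform_success(attempts: int) -> float:
    """Probability of success when distinct PINs are guessed with no ranking."""
    return attempts / TOTAL_PINS

ATTEMPTS = 3  # assumption: the card is blocked after three wrong PINs
print(f"most common first: {dictionary_success(PIN_SHARE, ATTEMPTS):.1%}")
print(f"blind guessing:    {uniform_success(ATTEMPTS):.2%}")
```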

2.5 Weak passwords are often chosen because they are easy to remember, but sometimes systems are weakened on purpose by decisions taken by their administrators. Many examples exist, but probably the most shocking one concerns the code for nuclear weapons in the USA during the Cold War. President Kennedy decided that every nuclear weapon should have an additional layer of security to prevent unintended use. In response, the ICBM (Intercontinental Ballistic Missile) force adopted a single code for all its nuclear missiles. To avoid the problem of the code being forgotten, it was set to 00000000 (https://www.globalzero.org/files/bb_keeping_presidents_in_the_nuclear_dark__episode_2_the_siop_option_that_wasnt_april_may_02.17.2004.pdf). Proper security locks were not activated until 1977.

2.6 Users are often the weakest link in the security chain. Even a well-protected system may be weakened if users do not follow the security procedures, which are usually cumbersome. A typical way to eavesdrop on a secure line is to create interference so that users cannot connect and switch to an insecure channel to keep communicating. This kind of behaviour is common even among the highest-level personnel. A former CIA Director lost his security clearance when it emerged that he used to carry secret documents on an insecure laptop to work at home (https://www.washingtonpost.com/wp-srv/national/daily/aug99/deutch21.htm).
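The jamming trick works only if the users, or their equipment, are willing to fall back to an insecure channel. The Python sketch below illustrates that design choice by contrasting a client that silently downgrades with one that fails closed. The channel functions and the `SecureChannelUnavailable` exception are hypothetical names and are not part of any real system.

```python
from typing import Callable

class SecureChannelUnavailable(Exception):
    """Raised instead of silently downgrading to an insecure channel."""

def send(message: str,
         secure: Callable[[str], str],
         insecure: Callable[[str], str],
         allow_fallback: bool = False) -> str:
    """Send over the secure channel; use the insecure one only if explicitly allowed."""
    try:
        return secure(message)
    except ConnectionError:
        if allow_fallback:
            # The behaviour an attacker who jams the secure line is counting on.
            return insecure(message)
        raise SecureChannelUnavailable("secure link down; message not sent") from None

def jammed_link(message: str) -> str:
    """Hypothetical secure link that is being jammed."""
    raise ConnectionError("interference on secure link")

def open_link(message: str) -> str:
    """Hypothetical insecure link that anyone can listen to."""
    return f"sent in clear: {message}"

print(send("briefing", jammed_link, open_link, allow_fallback=True))
try:
    send("briefing", jammed_link, open_link)
except SecureChannelUnavailable as err:
    print(f"refused: {err}")
```

Failing closed turns the attacker's jamming into a plain denial of service rather than an opportunity to eavesdrop. The cost is that the message is not delivered.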

2.7 The security of a computer system may be compromised by means other than technical attacks. A typical example is poor protection of passwords. The passwords to access the network of an RAF base were compromised when a photo in a media report accidentally showed a piece of paper posted on a wall with the user names and passwords for a protected system (https://www.theguardian.com/uk/2012/nov/20/prince-william-photos-mod-passwords).

2.8 Social engineering is another common way to obtain access to a system. Social engineers can talk information out of a company's employees using only their social skills. Valuable intelligence may also be found in social networks: by collecting several apparently harmless details, a social engineer can build very complex attacks. For example, the names and positions of key people in a company can easily be found on the internet or by collecting business cards at a conference. Social networks may also be a perfect way to find out when a particular person is out of town in order to impersonate him or her in a phone call. Finally, social skills may be used to talk someone into allowing access to a private network, e.g. by pretending to be a colleague from another city who needs to check their email.

This method was used in 1994 by Anthony Zboralski, a French hacker, who impersonated an FBI representative at the US embassy in Paris. He called the FBI in Washington and persuaded the person who answered the phone to explain how to use the FBI's phone conference system. He used it for four months, running up $250,000 in phone calls, before being detected and eventually arrested (https://www.liberation.fr/futurs/2005/02/21/le-hacker-qui-a-craque_510325).

According to security consultant and former cybercriminal Kevin Mitnick, once reputed to be the best hacker in the world, no technology can prevent a social engineering attack (Kevin Mitnick & William Simon. The Art of Deception. Wiley Publishing. 2002). Testifying before the US Senate in 2000, Mitnick stated that it is much easier to trick someone into giving a password than to spend the effort to crack into the system.

2.9 It is tempting to think that a well-informed system administrator and a correct security policy would protect a company from all the attacks and vulnerabilities described above. Nonetheless, even the best-protected company may be compromised by a talented and skilled attacker. How much talent and skill is needed to break into a top security firm? A combination of social engineering and the exploitation of an unpatched vulnerability was all it took to hack one of the most reputable companies in the cybersecurity business. In March 2011, RSA announced that it had been hit by a cyberattack. The attack eventually cost the company $66 million and caused serious problems for many of its customers and for the company itself.

Complete details of the attack were not released, but the basics are known. Several RSA employees received a phishing email with the subject line “2011 Recruitment Plan”. One of them found the message in the junk mail folder and opened the attached Excel file. The file acted as a Trojan horse, exploiting a vulnerability in Adobe's Flash software to install a remote control application. Once inside RSA's network, the hackers moved between systems and accessed further accounts until they reached sensitive data. Finally, they spirited several files out of RSA to a remote location.

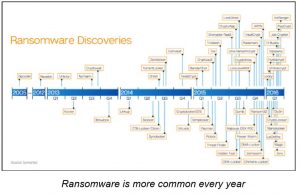

2.10 Taking control of a system or stealing data are not the only threats. An increasingly common cybercrime is so-called ransomware. The attacker manages to run an application on the system that encrypts the data stored on the network, and the data remain encrypted until a ransom is paid. An example is the ransomware attack on the Hollywood Presbyterian Medical Center: all the files on the hospital network were encrypted until the management agreed to pay a ransom of $17,000 (https://www.latimes.com/business/technology/la-me-ln-hollywood-hospital-bitcoin-20160217story.html). Even some police departments have been hit by ransomware and have had to pay a ransom to get their systems back (https://www.cnbc.com/2016/04/26/ransomware-hackers-blackmail-us-police-departments.html).

2.11 An attack is not always aimed at taking control of a system, or even at penetrating it. A Denial of Service (DoS) occurs when legitimate users cannot establish a connection to a server because it is too busy. This attack is very effective when the attacker has previously taken control of many computers (perhaps thousands of them) to form a so-called botnet. At the attacker's command, the botnet simultaneously requests connections to the target server, overloading it. This is called a Distributed DoS (DDoS) attack. Note that in such cases the server is taken down from the outside: there is no intrusion into the targeted server.
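A simple capacity model shows why a botnet succeeds where a single computer would fail. The Python sketch below assumes that an overloaded server drops excess requests at random, so legitimate users receive only their proportional share of its capacity. The capacity and traffic figures are hypothetical, and real servers and mitigation systems behave in more complex ways.

```python
def legitimate_success_rate(capacity: float, legit_rate: float,
                            bots: int, rate_per_bot: float) -> float:
    """Fraction of legitimate requests served by a possibly overloaded server.

    Simplifying assumption: when demand exceeds capacity, requests are dropped
    at random, so legitimate and attack traffic suffer equally.
    """
    total = legit_rate + bots * rate_per_bot
    if total <= capacity:
        return 1.0
    return capacity / total

# Hypothetical server: 10,000 requests/s capacity, 2,000 legitimate requests/s.
for bots in (0, 1_000, 100_000):
    share = legitimate_success_rate(10_000, 2_000, bots, rate_per_bot=10)
    print(f"{bots:>7} bots: {share:.1%} of legitimate requests served")
```

With these figures, one thousand bots already cause noticeable degradation. A hundred thousand bots leave legitimate users with about one request in a hundred, even though no bot ever enters the server.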

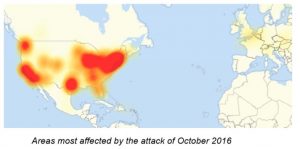

The biggest DDoS attack so far took place on October 21st 2016 (https://en.wikipedia.org/wiki/2016_Dyn_cyberattack). It was directed against the servers of Dyn, a provider of the Domain Name System (DNS) service that translates domain names into addresses so that traffic reaches the proper destination. It is estimated that the attack involved a botnet of around 100,000 infected devices (not only computers but also printers, webcams, baby monitors…). These devices generated about 1.2 terabits per second of traffic against the targeted servers. The affected services included very popular ones such as the BBC, CNN, Amazon, PayPal, Twitter and Visa. Note that these companies were not the direct target of the attack but merely users of the attacked servers.

2.12 A last consideration is that complexity is the worst enemy of security, and the more automated a system is, the more complex it becomes. A theoretically secure concept must be translated into algorithms. These algorithms are implemented in lines of code that form an application, and the application runs on an operating system installed on computers. The computers, in turn, belong to networks that depend on system administrators and are operated by users. Every step has its own vulnerabilities. It is estimated, for example, that commercial software contains 5 to 15 errors or “bugs” per thousand lines of code even after all testing and quality control, and each bug is a potential vulnerability (Bruce Schneier. Secrets & Lies, Digital Security in a Networked World. Wiley Publishing. 2000). An operating system like Windows XP had 45 million lines of code and Windows 8 around 50 million, while the version of Microsoft Office for Mac OS X issued in 2006 had around 30 million. It is simply impossible to patch every vulnerability, and even if it were possible, the system would still be vulnerable to social engineers.
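Applying the estimate of 5 to 15 bugs per thousand lines to the code sizes quoted above gives a sense of the scale of the problem. The Python sketch below performs only that arithmetic and treats every bug as a potential vulnerability, as the text does. The result is an order-of-magnitude estimate, not a measurement.

```python
DEFECTS_PER_KLOC = (5, 15)  # bugs per thousand lines, range quoted from Schneier in 2.12

CODE_SIZES = {  # lines of code as given in 2.12
    "Windows XP": 45_000_000,
    "Windows 8": 50_000_000,
    "Office for Mac (2006)": 30_000_000,
}

def bug_range(lines: int, per_kloc: tuple = DEFECTS_PER_KLOC) -> tuple:
    """Estimated residual bugs as (low, high) for a code base of `lines` lines."""
    kloc = lines / 1000
    low, high = per_kloc
    return round(kloc * low), round(kloc * high)

for product, lines in CODE_SIZES.items():
    low, high = bug_range(lines)
    print(f"{product}: {low:,} to {high:,} residual bugs")
```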

Aviation and cybersecurity

2.13 Surprisingly, while there is much information about cyberattacks against all kinds of industries, there is almost none related to aviation. Nonetheless, EASA has reported around 1,000 attacks per month in the aviation sector (https://www.euractiv.com/section/justice-home-affairs/news/hackers-bombard-aviation-sector-withmore-than-1000-attacks-per-month/). This seems a low number given the size of the industry (for comparison, it is estimated that in Spain alone there are 4,000 attacks per day (https://www.lavanguardia.com/vida/20160317/40510877283/ciberdelincuencia-ciberataquesciberespionaje-nube.html)). It must be said, however, that these figures are bare statements with no details about the specific types of event.

2.14 Very few events have been reported in aviation. In one of them, the hacking of the LOT airline's systems led to the cancellation of at least 10 flights at Warsaw airport in June 2015 (https://www.theguardian.com/business/2015/jun/21/hackers-1400-passengers-warsaw-lot). Although the attack caused delays and financial losses, safety was not affected.

A more dangerous event was reported in May 2015, when a cybersecurity expert claimed to have hacked into various aircraft systems (https://edition.cnn.com/2015/05/17/us/fbi-hacker-flight-computer-systems/). According to him, he had been able to issue a “climb” command to one of the hacked planes. This claim has been disputed and its credibility remains unclear, but it was a starting point for attention to the security of the networks used on board aircraft. Bruce Schneier, a reputable cybersecurity expert, wrote an article about the incident. He stated that newer airplanes have a serious security issue: the avionics and the entertainment system are on the same network, which, according to him, is a very stupid thing to do (https://www.schneier.com/blog/archives/2015/05/more_on_chris_r.html). Sources from the aircraft manufacturer denied that the two systems shared the same network.

2.15 Failures such as radar outages or automation crashes have sometimes been reported as cyberattacks by various websites. However, no service provider has ever confirmed this explanation, and the failures were reported as purely technical.

2.16 Even if no catastrophic cyberattack has been confirmed, the aviation community has started to study the problem. Cyber threats were mentioned in Annex 17 (Security) for the first time in a recommended practice added in 2011.

4.9 Measures relating to cyber threats

4.9.1 Recommendation.— Each Contracting State should, in accordance with the risk assessment carried out by its relevant national authorities, ensure that measures are developed in order to protect critical information and communications technology systems used for civil aviation purposes from interference that may jeopardize the safety of civil aviation.

4.9.2 Recommendation.— Each Contracting State should encourage entities involved with or responsible for the implementation of various aspects of the national civil aviation security programme to identify their critical information and communications technology systems, including threats and vulnerabilities thereto, and develop protective measures to include, inter alia, security by design, supply chain security, network separation, and remote access control, as appropriate.

(ICAO Annex 17, 9th edition, March 2011)

2.17 ICAO has now declared cybersecurity a priority subject. During the Twelfth Air Navigation Conference, held in Montreal in 2012, cybersecurity was identified as a high-level impediment to the implementation of the Global Air Navigation Plan. The following recommendations on the subject were adopted:

Recommendation 2/3 – Security of air navigation systems

That ICAO: (…) b) establish, as a matter of urgency, an appropriate mechanism including States and industry to evaluate the extent of the cyber security issues and develop a global air traffic management architecture taking care of cyber security issues.

Recommendation 3/3 – Development of ICAO provisions relating to system-wide information management

That: (…) c) ICAO undertake work to identify the security standards and bandwidth requirements for system-wide information management.

(ICAO Doc 10007, Twelfth Air Navigation Conference)

Note that System Wide Information Management (SWIM) is explicitly mentioned. The peculiarities of SWIM are discussed later in this paper.

2.18 During the 39th ICAO Assembly, held in 2016, cybersecurity was also the main subject of several papers, and a resolution on the subject was adopted:

Resolution A39-19: Addressing Cybersecurity in Civil Aviation

(…) Recognizing the multi-faceted and multi-disciplinary nature of cybersecurity challenges and solutions;

The Assembly:

1. Calls upon States and industry stakeholders to take the following actions to counter cyber threats to civil aviation:

a) Identify the threats and risks from possible cyber incidents on civil aviation operations and critical systems, and the serious consequences that can arise from such incidents;

(…)

i) Promote the development and implementation of international standards, strategies and best practices on the protection of critical information and communications technology systems used for civil aviation purposes from interference that may jeopardize the safety of civil aviation;

j) Establish policies and allocate resources when needed to ensure that, for critical aviation systems: system architectures are secure by design; systems are resilient; methods for data transfer are secured, ensuring integrity and confidentiality of data; system monitoring, and incident detection and reporting, methods are implemented; and forensic analysis of cyber incidents is carried out;

(…)

2.19 In February 2014, Eurocontrol's Member States entrusted it with developing, setting up and demonstrating Centralized Service 6 (Common Network Services) in Europe. This service includes CS 6-6 (Security Certificate Service) and CS 6-7 (Operation and Coordination of Network Security). CS 6-6 will act as a certification authority providing security keys and certificates. CS 6-7 will consist of two elements: a European ATM CERT (Computer Emergency Response Team) to prevent and respond to cybersecurity incidents, and a SOC (Security Operations Center) to collect data, analyse incidents, recommend solutions, etc. CS 6-7 will also serve ATM stakeholders who wish to delegate their own SOC functions. A demonstrator should be ready by mid-2017 (EUROCONTROL Information Paper Inf./PC/16-03 3.3.16).

The creation of an aviation CERT is also included in EASA’s European Plan for Aviation Safety 2016-2020 (https://www.easa.europa.eu/system/files/dfu/EPAS%202016-2020%20FINAL.PDF). It is still unclear how the ATM CERT of Eurocontrol and the AV-CERT of EASA will be related to each other.

2.20 The US Government Accountability Office (GAO) issued a report in April 2015 on the FAA's cybersecurity measures during the transition to NextGen. The report recognizes that the FAA has taken steps to protect its ATC systems from cyberthreats, but it still found some weaknesses. Among its recommendations, the report states that the FAA should develop an agency-wide threat model (GAO AIR TRAFFIC CONTROL: FAA Needs a More Comprehensive Approach to Address Cybersecurity As Agency Transitions to NextGen. April 2015). Note that, according to Schneier, threat modeling is the first step in any security solution (Bruce Schneier. Secrets & Lies, Digital Security in a Networked World. Wiley Publishing. 2000). The next steps are to describe the security policy required to defend against the threats and to design the countermeasures that enforce the policy.
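A threat model can be as simple as a list of assets, the threats against them and a score used to rank them. The Python sketch below uses likelihood multiplied by impact, each on a hypothetical scale from 1 to 5. The entries are taken from the threats discussed in 2.23, but the scores are invented for illustration. This is not the GAO's or the FAA's methodology.

```python
from dataclasses import dataclass

@dataclass
class Threat:
    asset: str
    threat: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (catastrophic)

    @property
    def risk(self) -> int:
        """Simple risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact

# Illustrative entries drawn from section 2.23; the scores are invented for the example.
THREATS = [
    Threat("VHF voice", "fake controller transmissions", 4, 5),
    Threat("GNSS", "jamming near an airport", 3, 4),
    Threat("ADS-B", "ghost aircraft injection", 3, 3),
    Threat("Remote tower link", "DDoS", 2, 5),
]

def ranked(threats):
    """Return threats sorted from highest to lowest risk score."""
    return sorted(threats, key=lambda item: item.risk, reverse=True)

for item in ranked(THREATS):
    print(f"{item.risk:>2}  {item.asset}: {item.threat}")
```

The ranking indicates where countermeasures should be considered first. This corresponds to the policy and countermeasure steps that Schneier places after threat modeling.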

2.21 Both the USA and Europe are taking steps to protect their ATM systems against cyberthreats, but their efforts began only recently compared with other industries. Cybersecurity has made routine some actions that were unthinkable a few years ago. We make mobile phone calls without thinking about the complex authentication and encryption protocols and algorithms that keep the conversation private and bill the cost to the correct customer. The same is true of e-commerce. These actions are common today because the industry managed to implement cybersecurity to such a level that the average customer trusts the system. It has been a long process, which started many years ago when the internet and the mobile phone industry were in their infancy. Why has aviation not followed the same path?

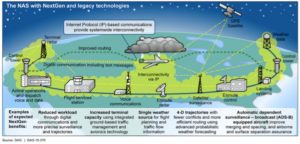

The answer lies in the legacy structure of the system. It did not require state-of-the-art security because it could only be attacked in old-fashioned ways. We still use amplitude modulation for VHF communications, navigation relies on systems designed in the mid-20th century and surveillance is mainly based on radar. These systems were developed in a world that was not yet digitally interconnected. The transition to technologically advanced systems is what creates the need to address technologically advanced threats. The picture above, from the GAO report mentioned before, gives a simple view of how the aviation system is evolving. It moves from a point-to-point structure to a network whose elements are nodes linked through digital connections using IP (Internet Protocol). A key concept is SWIM, which is essentially a private internet dedicated to ATM. The image below, from the same report, highlights the need for protection. A network structure similar to the internet requires security comparable to that of critical internet services.

Potential threats specific to aviation

2.22 So far we have discussed attacks on networks in general. We now turn our attention to potential attackers. Who might be interested in taking down any element of the civil aviation system? There are at least four kinds of threat:

- Amateur hackers. This is the first group that comes to mind when thinking about computer-based attacks. The challenge of finding a way into a protected system may be motivation enough for a highly skilled and motivated computer nerd. However, most of them are not willing to cause damage or to face the legal consequences of intruding into a critical system. Nonetheless, they must not be ignored.

- Criminals. A single person behind a computer may attack thousands of potential targets, often thousands of kilometers away in another country with a different legal system. This explains why scams such as phishing through fake emails or ransomware (see 2.10) are on the rise. This group is potentially more dangerous than the previous one because its motivation is financial. On the other hand, criminals try to maximize their profits by attacking targets with minimum cost and effort. Defensive measures must therefore be taken, but no extraordinary means are needed: if criminals meet proper resistance, they will move on to less protected and more profitable targets.

- Terrorists. While the usual cybercriminal is motivated by money and has no reason to cause unnecessary damage, a terrorist's goal is precisely to cause as much harm as possible. The high visibility of aviation events makes it an attractive target for such purposes. Individual airplanes have been targeted before, but computer networks may allow attacking several planes at once or disrupting the ATM system. The will to maximize damage makes terrorists a dangerous and fearsome threat. It also creates the need to use the strongest forms of cyberdefence for air traffic control, as for any other highly automated critical system.

- Cyberwar. When hostilities break out, the enemy's critical infrastructure becomes a target: electricity, communications, food supplies… and of course air traffic control. Automated systems are therefore targets, and some malware infections have been attributed to foreign agencies. It has been acknowledged that malware may already be in place to attack critical infrastructure, and that such attacks could be launched in retaliation for other cyberattacks. This kind of rhetoric is reminiscent of the Cold War and its doctrine of Mutual Assured Destruction (MAD). The parallel is close enough that the term MAC (Mutual Assured Crashes) has been suggested to describe the situation (Black Code: Inside the Battle for Cyberspace, Ronald J. Deibert, McClelland & Stewart 2013). It is almost impossible to protect a system against such adversaries because they have huge resources and skills.

2.23 What kind of attacks may be expected? This question is not easy to answer, because the ways to harm and disrupt a system are limited only by human imagination. Any component of the CNS-ATM system may have its own vulnerabilities. Some possible attacks are described below as examples.

2.23.1 Communications: At present, controller-pilot voice communication relies mainly on a legacy technology: amplitude-modulated VHF (or HF) radio. Because the channel is completely open, anyone with a radio transmitter can easily insert fake messages while pretending to be a controller, disrupting or jamming the system. An event of this kind happened, for example, in Melbourne in November 2016 (https://au.news.yahoo.com/a/33130708/manhunt-underway-after-radio-transmissions-withmelbourne-flights-hacked/#page1). An attack on CPDLC would require a higher technical level to succeed. However, CPDLC lacks the “party line” effect of open voice communication, so a successful attack would be more difficult to detect. A fake controller, or a successful man-in-the-middle attack modifying legitimate clearances, could have a great impact. Jamming of communications is also a threat.

- An attacker managing to send fake messages could create very dangerous situations, for example clearing a plane to a direct route without the controller being aware.

- Another way to disrupt the system would be to instruct every plane in a given sector to change frequency and contact a different one. One controller would suddenly be unable to contact the planes in the sector, while another would face an avalanche of incoming calls from unexpected planes.

- Both attacks above require some knowledge of the specific environment (names of waypoints or frequencies in the area). A level change clearance, however, would require only very basic knowledge of air traffic control. Combined with some surveillance information (from public sources such as flightradar24, for example), it could create a risk of mid-air collision.

- Combination of several attacks would be even more disruptive: a level change clearance followed by a change of frequency instruction would not only change the situation in the sector but would also seriously impair the correction of the maneuver by the legitimate controller.
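Detecting a modified clearance is, at its core, a message integrity problem. The Python example below sketches the general technique; it does not describe how CPDLC is secured in practice. It attaches an HMAC-SHA256 tag to each message, using a key assumed to be shared in advance between the ground system and the aircraft. The key, callsign and message format are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key shared in advance by the ground system and the aircraft.
SHARED_KEY = b"example-key-not-for-operational-use"

def tag(message: str, key: bytes = SHARED_KEY) -> str:
    """Compute an HMAC-SHA256 authentication tag for a clearance message."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).hexdigest()

def authentic(message: str, received_tag: str, key: bytes = SHARED_KEY) -> bool:
    """Compare tags in constant time; any change to the message breaks the match."""
    return hmac.compare_digest(tag(message, key), received_tag)

clearance = "ABC123 CLIMB TO AND MAINTAIN FL350"
sent_tag = tag(clearance)

# A man-in-the-middle alters the level but cannot recompute the tag without the key.
altered = "ABC123 CLIMB TO AND MAINTAIN FL370"
print(authentic(clearance, sent_tag))  # True
print(authentic(altered, sent_tag))    # False
```

A tag of this kind would not stop jamming. It would also not stop the replay of an old, genuine message, which would need sequence numbers or timestamps. And it protects nothing if the attacker obtains the key. Key management is a separate problem, of the kind the Security Certificate Service described in 2.19 is meant to address.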

2.23.2 Navigation: GNSS spoofing or jamming could affect navigation systems. A spoofer needs to be close to the target, so a threat of this kind seems unlikely to affect commercial aviation unless the attacker is inside the plane or the attack takes place in the vicinity of an airport.

- A good example may be GBAS. A ground station is used to provide corrections to the GNSS information so the aircraft may use the now augmented GNSS signal for approach purposes. One of the advantages is that, unlike ILS, a single GBAS station can serve several runways. An attacker able to replicate GBAS signals could potentially send fake information endangering operations in the vicinity of airports using this system.

- RPAs can also be affected. Professor Todd Humphreys of the University of Texas at Austin demonstrated this in 2012 (https://www.insidegnss.com/node/3131), when he managed to take down a drone by spoofing its GPS signals. The threat is not limited to RPAs: in 2013, Professor Humphreys successfully used the same technique against a yacht, which was steered off course (https://spectrum.ieee.org/telecom/security/protecting-gps-from-spoofers-is-critical-to-the-future-ofnavigation). In theory, a similar technique could be used against a plane by a device hidden in the cargo hold.

- Jamming the GNSS frequency to prevent its use may be an easier attack, because it does not require the technically complex replication of a GNSS signal. This type of attack is simply a DoS attack, as described in 2.11. Unlike the other events mentioned in this section, this one has already been observed: ICAO has mentioned GNSS interference in relation to some incidents in the Incheon FIR (ICAO AN-Conf/12-WP/134).
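A rough free-space link budget shows why jamming is the easier option. The Python sketch below assumes a hypothetical 1 W (30 dBm) jammer, isotropic antennas and free-space propagation. It compares the jamming power reaching a receiver with -128.5 dBm, the minimum received power specified for the GPS L1 C/A signal. This is a raw power comparison that ignores terrain, antenna patterns and receiver processing gain, so it indicates orders of magnitude only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
GPS_L1_HZ = 1_575.42e6          # GPS L1 carrier frequency
GPS_SIGNAL_DBM = -128.5         # minimum specified received power, GPS L1 C/A
JAMMER_DBM = 30.0               # hypothetical 1 W jammer

def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)

def jammer_margin_db(distance_m: float) -> float:
    """How far the jammer's received power exceeds the GPS signal, in dB."""
    received_dbm = JAMMER_DBM - free_space_path_loss_db(distance_m, GPS_L1_HZ)
    return received_dbm - GPS_SIGNAL_DBM

for km in (1, 10, 50):
    print(f"{km:>2} km: jammer is {jammer_margin_db(km * 1000):.0f} dB above the GPS signal")
```

In free space, each tenfold increase in distance costs only 20 dB. Even tens of kilometers away, a modest transmitter therefore remains far stronger than the satellite signal.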

2.23.3 Surveillance: Radar is still the surveillance system par excellence, but ADS-B is swiftly catching up. However, ADS-B signals can potentially be jammed, faked and generally used for attacks (Donald L. McCallie, Major, USAF: Exploring Potential ADS-B Vulnerabilities in the FAA's NextGen Air Transportation System. Graduate Research Project, Air Force Institute of Technology).

- Because ADS-B signals are not encrypted, they can easily be faked, making it possible to insert ghost planes into a surveillance system. To counter this threat, providers like the FAA do not rely solely on the ADS-B signal received by a single antenna. Instead, the ADS-B position is cross-checked against a position calculated by multilateration (MLAT) from the TDOA (time difference of arrival) of the signal at different ADS-B receivers. The ADS-B signal is thus used in two ways: its content provides a reported position, and its arrival times are used to calculate a position by MLAT. Both positions must agree for the signal to be considered valid.

- Another possible attack is jamming the system with a transmitter on 1090 MHz in the vicinity of a receiver to mask the signals. The use of several receivers, as described above, makes the success of this attack unlikely.

- Note that the lack of encryption in ADS-B can also help potential attackers. Services like flightradar24 make the surveillance information public and therefore available to an attacker, as mentioned in 2.23.1. The FAA no doubt considered this possibility when it asked RTCA to determine the feasibility and practicality of encrypting ADS-B broadcasts and transponder interrogations (https://www.rtca.org/Files/Committee%20Meeting%20Summaries/PMC_Dec_2015_Summary.pdf).
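The cross-check between the reported ADS-B position and the arrival times can be sketched in a few lines. The Python example below does not solve for the MLAT position. Instead, it uses the equivalent consistency test: it predicts the time differences of arrival that the reported position implies and compares them with those measured. The receiver layout, the flat two-dimensional grid, the noise-free measurements and the 500 m tolerance are all hypothetical simplifications.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def predicted_tdoas(position, receivers):
    """Time differences of arrival (s) at each receiver relative to receivers[0]."""
    times = [math.dist(position, rx) / SPEED_OF_LIGHT for rx in receivers]
    return [t - times[0] for t in times[1:]]

def position_consistent(reported, measured_tdoas, receivers, tolerance_m=500.0):
    """True if the TDOAs implied by the reported ADS-B position match the measured ones.

    tolerance_m is a hypothetical threshold, expressed as a range difference in metres.
    """
    predicted = predicted_tdoas(reported, receivers)
    worst_s = max(abs(p - m) for p, m in zip(predicted, measured_tdoas))
    return worst_s * SPEED_OF_LIGHT <= tolerance_m

# Hypothetical ground receivers on a local flat grid (metres).
RECEIVERS = [(0, 0), (40_000, 0), (0, 40_000), (40_000, 40_000)]

transmitter = (12_000, 25_000)  # where the signal really comes from
measured = predicted_tdoas(transmitter, RECEIVERS)  # simulated, noise-free

print(position_consistent((12_000, 25_000), measured, RECEIVERS))  # honest report: True
print(position_consistent((30_000, 5_000), measured, RECEIVERS))   # ghost position: False
```

A real multilateration system would solve for the transmitter position from noisy measurements in three dimensions. The point of the sketch is only that a ghost target broadcast from one place cannot easily produce arrival-time differences consistent with a different claimed position.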

2.23.4 New ATM concepts: Finally, completely new concepts are being put in place that open the door to new vulnerabilities and indirect attacks.

- Remote towers, for example, offer a new target to a potential attacker. Traffic in a remotely controlled airport could be disrupted through the injection of corrupt data in the communication between the airport sensors and cameras and the remote tower. Much easier may be the simple interruption of communications by a DDoS attack which would make the controllers blind, deaf and mute. The worst scenario is a DDoS attack against a single facility controlling several remote towers that would disrupt the traffic of several airports with a single action.

- SWIM is perhaps the most appealing target in a future ATM system. As described in 2.20, SWIM may be seen as an ATM-only internet. Descriptions of SWIM operations envisage a future network in which all kinds of data are available to the appropriate users: meteorological data, for example, would be available to ATS facilities and even to aircraft, which would be connected to the system in the future. Other data may include flight plans, trajectories and surveillance data. However, because SWIM uses IP (Internet Protocol), the same kinds of attacks used on the internet could probably be used against it, including DDoS, injection of corrupt data, theft of sensitive data and ransomware. Extending the system to all kinds of users, from ATC to small General Aviation clubs and from airlines to meteorological services, increases the number of potentially vulnerable entry points.

- The system could also crash as collateral damage. The massive DDoS attack described in 2.11 was not directed against any of the services that were affected but against a choke point needed to direct internet traffic. A similar event could affect SWIM services even if the aviation system were not the primary target of the attackers.

- The ATC service may be disrupted as a result of an attack on an indirectly related system. More and more systems are automated and controlled by remote computers. If the air conditioning of a computer room in an ACC were controlled in this way and remotely switched off on a hot day, the temperature in the room would rise quickly due to the heat from the processors, eventually overheating the computers and leading to a DoS.

- Finally, plain security and cybersecurity may be closely related. The best-protected network is vulnerable if any of its components can be physically damaged. The fire at Chicago ACC started by a disgruntled contractor in September 2014 led to the facility being closed for 18 days (https://www.faa.gov/news/press_releases/news_story.cfm?newsId=17834). This event can be seen as a DoS with physical causes.

IFATCA Policy

2.24 There is no IFATCA policy that specifically addresses cybersecurity. The only related policy, in a broad sense, is the following. It is intended to deal with physical interference but can be applied to a cyberattack that interferes with any aviation system with the intention of disrupting safe and efficient operations. Paragraphs that can be applied to cybersecurity are underlined.

LM 11.4 UNLAWFUL INTERFERENCE WITH INTERNATIONAL CIVIL AVIATION FACILITIES

IFATCA policy is: ATC personnel are entitled to maximum security with respect to the safeguarding of personal life, operational environment and the safety of aircraft under their control.

If, during unlawful interference with Civil Aviation, the appropriate authorities instruct the Controller to deviate from, or violate, the ICAO rules, he shall in no way be held legally responsible for carrying out such an order. All orders which imply a deviation from the established air traffic rules shall be conveyed through the appropriate authorities, normally the immediate superior, and always through the authority responsible for the provision of Air Traffic Services. Such orders shall always be issued in written form, clearly identifying their origin and authority, and retained for investigative purposes. The Air Traffic Controller on duty shall be granted relief from his working position when the conditions stated in para. 4.3 above are not followed, or when he considers the content of the order wrong or criminal.

During unlawful interference against ATC facilities, or its threat, services may be withdrawn. Measures shall be included in national or international contingency procedures, designed in such a manner, to ensure there will be minimal disruption of service.

Member Associations shall also urge their governments to ratify the existing protocols, conventions and treaties on these matters, to make them available to whom it concerns and to refrain from any course of action contrary to those rules. Member Associations should seek formal agreement on the conduct of an Air Traffic Controller during situations of unlawful interference and the adoption of contingency procedures during such situations.

IFATCA will undertake, through its Executive Board, to transmit the contents of this policy to the appropriate international organisations, namely the United Nations, ICAO and the ILO, and also regional organisations who may be concerned with these matters.

(Page 4 2 4 34 from the TPM (Christchurch, 1993))

A holistic approach to security

2.25 To summarize the situation, important stakeholders such as ICAO, EASA, EUROCONTROL and the FAA are beginning, albeit late, to address this issue. The experience of other industries in implementing cybersecure systems can help aviation build a solid one. The preceding paragraphs have shown the importance not only of a technically well-built system but also of its being well implemented, and of users not circumventing the security measures for the sake of easier and faster use. If the system compels the user to use strong passwords or two-factor authentication, we may be tempted to think that no further caution is needed, but we would be wrong.

2.26 Throughout this paper, several examples of security failures have been described, with vulnerabilities found at every level. Security does not rely exclusively on programming algorithms and keys. According to cryptography consultant Bruce Schneier:

(…) I found that the weak points had nothing to do with the mathematics. They were in the hardware, the software, the networks and the people. Beautiful pieces of mathematics were made irrelevant through bad programming, a lousy operating system or someone’s bad password choice (Bruce Schneier. Secrets & Lies, Digital Security in a Networked World. Wiley Publishing. 2000).

Schneier summarizes this view in a single sentence:

Security is a process, not a product.

The consequence is that, since it is not a product, security cannot be bought. When a system is put in place, care must be taken at every implementation step and on every day of operation, at every level.

2.27 From this perspective, the user can also help to improve security. Strong algorithms, secure implementation, robust hardware and software and solid networks are the domain of engineers, but choosing a good password and refusing to give any relevant piece of information to someone who may be an attacker using social engineering techniques are up to the user. Consequently, end users must understand the basics of security and the appropriate protocols and behavior so as not to weaken the system, which means that training on the subject must be provided.
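Schneier's remark that good mathematics can be made irrelevant by someone's bad password choice can be illustrated with a minimal Python sketch of a dictionary attack. It assumes an attacker has obtained a stored password hash and its salt. Even though the hash here is salted and deliberately slow (PBKDF2-HMAC-SHA256 from Python's standard `hashlib`, chosen only for illustration and not a scheme described in this paper), a password that appears in the attacker's word list is recovered after a handful of guesses, while a long random one is not. The word list, the passwords and the iteration count are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # hypothetical PBKDF2 work factor


def hash_password(password, salt):
    """Derive a salted, deliberately slow hash (PBKDF2-HMAC-SHA256)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def dictionary_attack(stored_hash, salt, wordlist):
    """Hash each candidate word; return the one matching the stored hash, or None."""
    for candidate in wordlist:
        if hmac.compare_digest(hash_password(candidate, salt), stored_hash):
            return candidate
    return None


# A tiny stand-in for the large lists of common passwords real attackers use.
wordlist = ["123456", "password", "qwerty", "tower123", "letmein"]

salt = os.urandom(16)
weak = hash_password("tower123", salt)
strong = hash_password("v7#Qp!x2-Lm9&Rz", salt)

print(dictionary_attack(weak, salt, wordlist))    # tower123
print(dictionary_attack(strong, salt, wordlist))  # None
```

The slow hash only raises the cost of each guess; it cannot protect a password that the attacker is going to guess anyway, which is why the user's choice matters as much as the engineering.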

Conclusions

3.1 A cyberattack against a highly technological system has the potential to disrupt or interrupt the service provided by the system, also endangering safety. Attacks on automated systems may have different purposes: stealing information, taking control of the system, inserting corrupt data or denying access to legitimate users.

3.2 Security is a process, not a product. Accordingly, it cannot be bought and must be built every day at every level, from the designers of the system to the end users. It concerns not only technical systems but also the use of good practices by users to prevent threats such as dictionary attacks or social engineering. Some training on the subject is desirable.

3.3 Aviation has so far been relatively free of cybersecurity incidents. The increasing use of technology and network connectivity makes such events more probable. Accordingly, the ICAO 12th Air Navigation Conference declared cybersecurity a high-priority subject and the 39th Assembly adopted a resolution to address the issue.

3.4 Organizations such as the FAA and EUROCONTROL have taken steps to enhance cybersecurity, but there is still a long way to go, as aviation in general lags behind other industries that digitalized much earlier.

3.5 There is no specific IFATCA policy on cybersecurity, but the existing policy on unlawful interference may be applied to the subject.

Recommendations

4.1 It is recommended that:

IFATCA Provisional Policy is: Compromised cybersecurity poses a significant risk to safety in aviation. IFATCA considers intentional cyberattacks to be a form of unlawful interference.

and is included in the IFATCA Technical and Professional Manual.

4.2 It is recommended that a review of the above policy, together with the existing unlawful interference policy and related policies, be placed on the work program of TOC and PLC for the 2017/18 cycle.