53RD ANNUAL CONFERENCE, Gran Canaria, Spain, 5-9 May 2014
WP No. 155
A Better Understanding of the Linear versus the Systemic Approach to Safety
Presented by PLC

Summary

The objective of this paper is to clarify the following approaches to safety: to explain the linear approach to safety, to explain the systemic approach to safety, and to create a better understanding by pointing out the differences between the two.

Introduction

1.1 Task and relevance of this paper

1.1.1 During the IFATCA conference in April 2013 (Sanur, Bali), Tom Laursen, IFATCA representative to the SESAR project, presented Working Paper 165, ‘Resilience and a new Safety Approach. Do we have the right model to learn from past and future events?’ The presentation was followed by a three-hour debate, which made it clear that there were many ambiguities on the subject.

1.1.2 In that paper the following claim was made:

“Optimising safety has required us to think of new philosophies. The challenge is to further improve safety from 10⁻⁷ to even better. Various models of safety such as the Domino model, Swiss cheese model and linear model are all good for simple systems, not so good for complex systems as the cause effect relationship is also complex. A consequence of the cause and effect thinking is that we tend to separate incidents and accidents from the processes that create positive results and positive system behaviours. Therefore we create the situation where there is a difference between the processes that create a negative result compared to the process that created the positive result. When the focus is on that which goes wrong (accidents, incidents, etc.), then it is difficult to see that which goes right. In the Systemic model it is assumed that accidents result from unexpected combinations (resonance) of normal performance variability. Failure and success stem from the same source.”

1.1.3 In reaction to this debate, IFATCA President Alexis Braithwaite wrote in the post-conference circular of May 2013:

“Just reading the minutes gave me the sense that this debate was equally fascinating and frustrating. As controllers and as the Federation, we are quick to say that Safety is our business. However, as a Federation we have no agreed definition of safety and therefore no agreed approach to managing safety from the controller perspective.”

1.1.4 It was concluded that the paper presented at the 2013 conference left many questions unanswered, especially because the basic understanding of the linear and systemic approaches to safety was not clear.

1.1.5 At the 2013 conference in Bali, the following recommendations were made:

- PLC and TOC in collaboration with the Global Safety Team are tasked with the provision of further guidance material to enable better understanding of a systemic approach to safety. Policy should be developed as required, to enable IFATCA to adopt a systemic approach to safety.

- PLC and TOC are tasked with reviewing existing policy and developing new policy with regard to the new approach to safety.

Discussion

2.1 Objective

2.1.1 As described above, the objective of this paper is to provide clarity concerning the following accident models and approaches to safety:

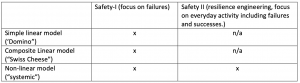

- Explain the linear accident model, which can be used in the Safety-I approach. Two variants are considered:

- The Linear (domino) approach: based on a causal relationship.

- The Swiss cheese model: the approach recognizes that multiple variables can affect an outcome. It presents a more complete understanding of the situation.

- Explain the systemic accident model. This non-linear model can be applied with a focus on failures (Safety I), and it is the only model that can be used in resilience engineering (Safety II):

- The systemic model aims to provide a holistic view on safety.

- Create a better understanding by pointing out the differences between the linear and the systemic accident model.

- Explain the Safety I approach (focus on norms; mitigating failures).

- Explain the Safety II approach (resilience engineering in safety thinking).

- Explain the relations between the accident models and the safety approaches.

2.2 Approach

2.2.1 The authors of the paper ‘Resilience and a new Safety Approach. Do we have the right model to learn from past and future events?’, Tom Laursen and Anthony Smoker, stated the following:

“Understanding the systemic approach to safety is something that is not done by reading one paper or implementing a checklist. It is much more about changing the principles that we have used as our approach to safety for decades by exploring new ways of thinking.”

2.2.2 In the paper mentioned above, the accident models (from linear to non-linear) and the approaches to safety were treated together. In this paper we will separate the two.

2.2.3 In this paper we will focus on the questions as defined in paragraph 2.1 above. To create a better understanding of the two approaches and the different models, we will put the principles in a matrix and point out the differences.

2.3 A bit of history on safety in aviation

2.3.1 Introduction

2.3.1.1 Safety is our business. All processes in air traffic come down to the question: how can we control traffic so that an optimal level of safety is achieved, with minimal concessions to efficiency and the environment? The International Federation of Air Traffic Controllers’ Associations (IFATCA) lists among its objectives (Chapter 4.2):

“to promote safety, efficiency and regularity in International Air Navigation”.

2.3.1.2 But what exactly does this mean? What does safety mean in the eyes of each individual air traffic controller? How can we define safety?

2.3.1.3 ICAO published Annex 19, Safety Management, in July 2013, having worked on the subject since 2001. Annex 19 defines safety as:

“The state in which risks associated with aviation activities, related to, or in direct support of the operation of aircraft, are reduced and controlled to an acceptable level”.

2.3.1.4 Under this definition, we try to identify risks and to reduce and control them in an efficient and regular manner. But is this sufficient for the approach to safety we use today?

2.3.2 Safety eras in aviation

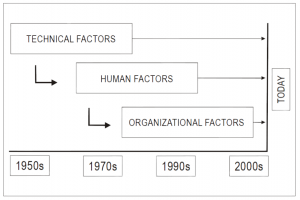

2.3.2.1 Looking at the history of aviation, the progress of safety can be divided into three eras (ICAO Doc 9859):

1) The technical era — from the early 1900s until the late 1960s. Aviation emerged as a form of mass transportation in which identified safety deficiencies were initially related to technical factors and technological failures. The focus of safety endeavours was therefore placed on the investigation and improvement of technical factors. By the 1950s, technological improvements led to a gradual decline in the frequency of accidents, and safety processes were broadened to encompass regulatory compliance and oversight.

2) The human factors era — from the early 1970s until the mid-1990s. In the early 1970s, the frequency of aviation accidents was significantly reduced due to major technological advances and enhancements to safety regulations. Aviation became a safer mode of transportation, and the focus of safety endeavours was extended to include human factors issues including the man/machine interface. This led to a search for safety information beyond that which was generated by the earlier accident investigation process. Despite the investment of resources in error mitigation, human performance continued to be cited as a recurring factor in accidents. The application of human factors science tended to focus on the individual, without fully considering the operational and organizational context. It was not until the early 1990s that it was first acknowledged that individuals operate in a complex environment, which includes multiple factors having the potential to affect behaviour.

3) The organizational era — from the mid-1990s to the present day. During the organizational era, safety began to be viewed from a systemic perspective, which was to encompass organizational factors in addition to human and technical factors. As a result, the notion of the “organizational accident” was introduced, considering the impact of organizational culture and policies on the effectiveness of safety risk controls. Additionally, traditional data collection and analysis efforts, which had been limited to the use of data collected through investigation of accidents and serious incidents, were supplemented with a new proactive approach to safety. This new approach is based on routine collection and analysis of data using proactive as well as reactive methodologies to monitor known safety risks and detect emerging safety issues. These enhancements formulated the rationale for moving towards a safety management approach.

2.3.3 The Evolution of Safety

2.3.3.1 These eras show that the management of safety evolves. As processes change, so does our understanding of how they operate, and therefore so does the management of safety risk; this is an ongoing development. Ideas on how to approach safety are shifting from an approach based predominantly on failures towards one that focuses on everyday activity, including both failures and successes.

2.3.3.2 This development originates from the realisation that current systems, regarded holistically, are so complicated that foreseeing and mitigating all possible risks is no longer an option. Resilience of the system, achieved through variable responses to occurrences, may theoretically be the best way to ensure safety.

2.3.4 Developments in safety research

2.3.4.1 Looking at the history of developments in safety research, the following diagram gives an overview of the studies and models applied since 1931.

2.3.5 Evolution of accident models

2.3.5.1 As the management of safety risks has changed, the accident models used have also evolved. To keep things understandable, we focus only on the three major steps in the development of the models.

Step 1:

The linear accident model, also called the domino theory (Heinrich, 1931), is visualised as a set of dominoes. If one domino falls, it knocks down those that follow. If the dominoes represent accident factors, the model shows how these factors form a sequence of events in which the link between cause and effect is simple and deterministic.

(Hollnagel, 2002) This model focuses on what went wrong, but in doing so leaves out additional information that may be important. The model need not, of course, be limited to a single sequence of events; it may represent either the scenario as a whole or only the events that went wrong. The focus on failures creates a need to find the causes of what went wrong. When a cause has been found, the next logical step is either to eliminate it or to disable suspected cause-effect links. The outcome is then measured by counting how many fewer things go wrong.
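The domino logic described above (a fixed chain, find a cause, remove it, see fewer things go wrong) can be sketched as a toy model. This is a minimal illustration under stated assumptions: the factor names are hypothetical placeholders, not Heinrich's original factors, and the code models nothing beyond a strict one-after-another chain.

```python
# Toy sketch of the linear (domino) model. The factor names below are
# hypothetical placeholders, not Heinrich's original five factors.
CHAIN = ["latent condition", "unsafe act", "loss of separation", "accident"]


def falling_dominoes(chain, removed=frozenset()):
    """Return the factors that fall, in order.

    The first domino in the chain is pushed; each later one falls only if
    the one before it fell. A removed domino (an eliminated cause) stops
    the sequence at that point.
    """
    fallen = []
    for factor in chain:
        if factor in removed:
            break  # the chain is broken here, nothing after it falls
        fallen.append(factor)
    return fallen


def accident_occurs(chain, removed=frozenset()):
    """The accident happens only if the last domino falls."""
    return chain[-1] in falling_dominoes(chain, removed)


print(falling_dominoes(CHAIN))                         # all four factors fall
print(accident_occurs(CHAIN, removed={"unsafe act"}))  # False
```

Removing any single link prevents the outcome, which is why a purely linear analysis tends to stop once one cause has been found and eliminated.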

(Hollnagel, 2012) The Safety I approach tacitly assumes that systems work because they are well designed and scrupulously maintained, because procedures are complete and correct, because designers can foresee and anticipate even minor contingencies, and because people behave as they are expected to, and more importantly as they have been taught or trained to do. This unavoidably leads to an emphasis on compliance in the way work is carried out.

Step 2:

The linear approach was followed by Reason’s Swiss cheese model (also called the epidemiological model). Its basis is similar to that of the domino model: it focuses on breaches of norms, which eventually lead to an incident.

(Cooper, 2001) Although the linear model has proven to be useful in identifying the sequence of events in the accident causation chain, the model has largely failed to specify how and under what conditions each of the sequential elements might interact to produce accidents. Many practitioners have continued to blame the individual for the unsafe act or merely identify and rectify the immediate unsafe conditions, rather than examining how and why the unsafe act occurred, or how the unsafe condition was created. Reason’s pathogen model has largely overcome these shortcomings. Reason suggested that ‘latent’ failures lie dormant, accumulate and subsequently combine with other latent failures which are then triggered by ‘active’ failures (e.g. unsafe acts) to overcome the system’s defences and cause accidents.

(Reason, 1987) ‘All man-made systems have within them the seeds of their own destruction, like “resident pathogens” in the human body. At any one time, there will be a certain number of component failures, human errors and “unavoidable violations”. No one of these agents is generally sufficient to cause a significant breakdown. Disasters occur through the unseen and usually unforeseeable concatenation of a large number of these pathogens.’
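The Swiss cheese idea, that a hazard becomes an accident only when the "holes" in several defences line up, can be illustrated with a small calculation. This is a sketch under loud assumptions: the per-layer hole probabilities are invented for illustration, and the layers are assumed to fail independently. That independence assumption is exactly what the systemic view questions, since it treats the defences as unrelated.

```python
import math
import random

# Hypothetical probabilities that each defence layer has a "hole" when a
# hazard arrives. Illustrative assumptions only, not measured values.
LAYER_HOLE_PROBS = [0.1, 0.05, 0.2]


def breach_probability(hole_probs):
    """Probability that a hazard passes every layer, assuming the layers
    fail independently (the holes line up only by chance)."""
    return math.prod(hole_probs)


def simulate_breaches(hole_probs, trials=100_000, seed=1):
    """Monte Carlo estimate of the same probability: in each trial every
    layer independently has a hole with its own probability."""
    rng = random.Random(seed)
    breaches = sum(
        all(rng.random() < p for p in hole_probs) for _ in range(trials)
    )
    return breaches / trials


print(breach_probability(LAYER_HOLE_PROBS))  # analytic value, about 0.001
print(simulate_breaches(LAYER_HOLE_PROBS))   # close to the analytic value
```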

Step 3:

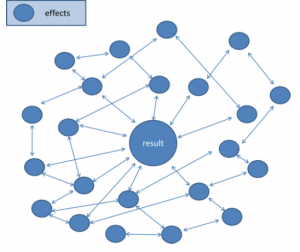

(Hollnagel, 2012) The systemic approach endeavours to describe the characteristic performance on the level of the system as a whole, rather than on the level of specific cause-effect “mechanisms” or even epidemiological factors. Instead of using a structural decomposition of the system, the systemic view considers accidents as emergent phenomena, which therefore also are “normal” or “natural” in the sense of being something that must be expected.

(Hollnagel, 2001) Human performance must always satisfy multiple, changing, and often conflicting criteria. Humans are usually able to cope with this imposed complexity because they can adjust what they do and how they do it to match the current conditions.

2.3.5.2 Based on the theories of Cooper, Reason and Hollnagel, the various approaches can be summarized as follows:

- The linear approach is based on causal relationships that follow one after another.

- The Swiss cheese model is based on a causal relationship between multiple predictor variables and a result. As the approach recognizes that multiple variables can affect an outcome, it presents a more complete understanding of the situation.

- The systemic model not only recognises the effect of each predictor variable on the result; it also incorporates the correlations between predictor variables, that is, the effects that the predictor variables (the variables that affect the outcome) have on one another. If all predictor variables could be established, the approach would provide a holistic (complete) view of safety. Because the correlations are part of the approach, both the effects that go right and those that go wrong are captured.
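The difference summarised in paragraph 2.3.5.2 can be made concrete with a deliberately crude toy model of "resonance" of normal performance variability. This is a hypothetical sketch, not an implementation of Hollnagel's FRAM: the tolerance, system margin, variability levels and the pairwise coupling term are all invented assumptions. Every function always stays inside its own normal band, yet some combinations exceed what the system can absorb, and letting the variables affect one another (coupling) increases the number of such combinations.

```python
from itertools import product

# Illustrative assumptions only: a toy stand-in for "resonance" of
# normal performance variability, not an implementation of FRAM.
NORMAL_BAND = 1.0         # hypothetical tolerance for each single function
SYSTEM_MARGIN = 2.5       # hypothetical variability the system can absorb
LEVELS = (0.2, 0.5, 0.9)  # everyday variability levels, all inside the band


def outcome(variabilities, coupling=0.0):
    """Classify one combination of function variabilities.

    coupling > 0 adds pairwise products, a crude way of letting one
    variable amplify another (the correlations between predictor variables).
    """
    total = sum(variabilities)
    n = len(variabilities)
    for i in range(n):
        for j in range(i + 1, n):
            total += coupling * variabilities[i] * variabilities[j]
    return "adverse" if total > SYSTEM_MARGIN else "acceptable"


def count_adverse(coupling, functions=3):
    """Count adverse outcomes over every combination of everyday levels."""
    combos = list(product(LEVELS, repeat=functions))
    # No single function ever leaves its normal band in these combinations.
    assert all(v < NORMAL_BAND for combo in combos for v in combo)
    return sum(outcome(combo, coupling) == "adverse" for combo in combos)


print(count_adverse(coupling=0.0))  # 1 of 27 combinations is adverse
print(count_adverse(coupling=0.5))  # 7 of 27 once the variables interact
```

Both acceptable and adverse outcomes come from the same everyday variability levels, which mirrors the claim that failure and success stem from the same source.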

2.4 Vocabulary

2.4.1 Tom Laursen and Anthony Smoker (2013, WP165) described the linear and systemic (systems) approaches to safety. But how do these approaches relate to the terms Safety-I and Safety-II as used by Erik Hollnagel (2012)?

- Definition Safety-I: Safety I is the condition where the number of adverse outcomes (accidents/incidents/near misses) is as low as possible. Safety-I is achieved by trying to make sure that things do not go wrong, either by eliminating the causes of malfunctions and hazards, or by containing their effects.

- Definition Safety-II: Safety II is a condition where the number of successful outcomes is as high as possible. It is the ability to succeed under varying conditions. Safety-II is achieved by trying to make sure that things go right, rather than by preventing them from going wrong. The focus here is on the system, including all the (cor)relations. Both failures and successes are measured to ensure optimal performance.

Based on the accident models and the definitions above, the following table can be drawn:

As linear models do not include the whole system, they cannot be applied in the Safety II concept. Theoretically, systemic accident models can be used both with a focus on failures and with a focus on the system as a whole.

2.5 Safety I compared to safety II

2.5.1 Below, a comparison between the two approaches to safety, based on the literature (Hollnagel), is presented:

| Safety I approach to safety | Safety II approach to safety |
|---|---|
| Traditional approach to safety. | New approach to safety (since the 1990s). |
| Safety is a condition where the number of things that go wrong is acceptably small or as low as possible. | The number of positive events is kept as high as possible: safety as ‘maximisation of things that go right’, ‘ensure that everything goes right’. |
| Focus on failure: what went wrong. | Focus on success: what went well. |
| Failure and success (unsafe and safe system behaviour) come from different modes of one system. | Failure and success come from the same sources within the system. |
| The system is robust and works because it is well designed and scrupulously maintained, because procedures are complete and correct, because designers can foresee and anticipate even minor contingencies, and because people behave as they are expected to, and more importantly as they have been taught or trained to do. This unavoidably leads to an emphasis on compliance in the way work is carried out. | The ability to succeed under varying conditions, so that the number of intended and acceptable outcomes (in other words, everyday activities) is as high as possible. Individuals and organisations must always adjust their performance to the current conditions. Because resources and time are finite, such adjustments are inevitably approximate. People are able to adjust what they do to match the conditions of work. |
| Based on (amongst others) the linear accident model, the epidemiological accident model (Laursen, 2013), the domino theory (Heinrich, 1931), the Swiss cheese model (Reason, 1990) and ultimately systemic accident models. | Based on the systemic accident model and resilience engineering. |
| The links between cause and effect are simple and deterministic. The system can be decomposed, and all components of the system function in a bimodal manner (they either work or fail). | Describes the characteristic performance at the level of the system as a whole. |
| Accidents involve successive breaches of multiple system defences. The breaches can be triggered by a number of enabling factors such as equipment failures or operational errors. | Success is ascribed to the ability of groups, individuals and organisations to anticipate the changing shape of risk before damage occurs. Failure is simply the temporary or permanent absence of that ability. |
| An accident has observable and measurable effects caused by technical, human or organisational failures and malfunctions. These can be measured, mapped and mitigated. | Instead of using a structural decomposition of the system, the systemic view considers accidents as emergent phenomena, which are therefore also “normal” or “natural” in the sense of being something that must be expected. They must be mitigated by resilience in the system. |
| There is a need to find the causes of what went wrong. When a cause has been found, the next logical step is either to eliminate it or to disable suspected cause-effect links. The outcome is then measured by counting how many fewer things go wrong after the intervention. | Things basically happen in the same way, regardless of the outcome. The purpose of an investigation is to understand how things usually go right as a basis for explaining how things occasionally go wrong. |
| Humans are seen as ‘fallible machines’. They are clearly a liability, and their performance variability is seen as a threat. Humans are a variable in the system. | People are usually able to cope with the complexity imposed on them because they can adjust what they do and how they do it to match the current conditions (resilience). |
| Based on reactive assessment of failure. The response typically involves looking for ways to eliminate the cause or causes that have been found, or to control the risks by improving options for detection and recovery. Reactive safety management embraces a causality credo, which goes as follows: (1) Adverse outcomes (accidents, incidents) happen when something goes wrong. | Proactive: it results in resilience by allowing variables in the system (operators) to intervene, ensuring that things can go right. |

2.6 Pros and cons of the linear approach to safety

2.6.1 The goal of the linear approach to safety is to mitigate and/or prevent everything that can go wrong. When incidents or accidents (unacceptable risks) occur, we should respond and try to find their causes; this is our responsibility.

2.6.2 The linear approach to safety is based on a simplified model, which makes it easy to understand. In some cases, the simple relationship between cause and effect answers the safety question efficiently and effectively.

2.6.3 However, in the linear approach to safety the human being is often seen as the trigger of failure in an otherwise perfect system. This overly simplistic view may lead to the conclusion that the human being (or human behaviour) is the risk that needs to be mitigated. On the other hand, the system cannot work without human involvement, so in the simplistic view of the linear approach the system is inherently fallible. When the linear approach to safety is followed after an incident, this often results in unintended blame being directed at the human operator. This view is incomplete.

2.7 Pros and cons of the systemic approach to safety

2.7.1 In the systemic approach to safety, the system is approached holistically. This means that efforts are made to understand all the contributing factors in a system. The effects of the factors on one another are also considered.

2.7.2 However, implementing the systemic approach to safety is complex. The ATM system is often considered a ‘black box’ that cannot yet be described by any equation. As a result, it may be too complex to establish a complete set of norms, guidelines and rules by which operators can excel in their performance. Understanding, mapping and changing the system is very complex under the systemic approach to safety. According to the MAREA (Mathematical Approach towards Resilience Engineering in ATM) project, this kind of modelling is at an early stage of development. The objective of MAREA is to develop an adequate mathematical modelling and analysis approach for prospective analysis of resilience in ATM (https://complexworld.eu/wiki/MAREA).

2.8 Safety I

2.8.1 To understand a failure, the focus is on what went wrong.

2.8.2 The outcome of Safety I is a set of norms and guidelines to prevent future failures.

2.8.3 As the complexity of systems increases, so does the number of norms and guidelines. The result can be an overly rigid system. Because a complex system cannot be fully understood, faults may be built into it, and rigidity can lead to a situation in which guidelines prevent the application of flexible solutions that could otherwise provide resilience.

2.8.4 In line with the ideas behind the linear approach to safety, humans are assumed to behave as instructed or as trained, within norms, leaving little room for improvisation in a non-standard situation. This approach can therefore also reduce safety rather than improve it, because it reduces autonomy. Another issue is that, as systems grow in complexity, it becomes very difficult and expensive to keep up with developments and to train for every (irregular) situation that may occur. Because this complexity means that systems and faults can never be fully understood, it is important to train competencies instead of training all possible solutions.

2.9 Safety II

2.9.1 Humans are seen as the solution: they provide flexible support in varying situations, leading to a robust system that can handle situations the system by design is not equipped to handle.

2.9.2 To understand the system, the focus is on what went well: the ‘normal operation’.

2.9.3 Human performance is a source of success as well as of failure. Current systems are not bimodal; everyday performance is variable and flexible. Humans are the knowledge systems and a source of safety. People can adjust their work to match the prevailing conditions. As René Amalberti stated:

“Human operators are up to now the only intelligent, flexible and real time adaptable component of the system.”

2.9.4 As with the systemic accident model (paragraph 2.7.2), implementing the systemic approach to safety is complex. The ATM system is often considered a ‘black box’ that cannot yet be described by any equation, so it may be too complex to establish a complete set of norms, guidelines and rules by which operators can excel in their performance. According to the MAREA project, the mathematical modelling and analysis of resilience in ATM is at an early stage of development.

2.9.5 Another reservation regarding the use of Safety II is that it is based on a theoretical model, which has not yet been put to the test.

2.10 Interaction between the linear approach to safety and the systemic approach to safety

2.10.1 The global air transport system is becoming safer every year. 2013 was the safest year in aviation history with regard to the total number of fatalities (251, compared with 496 in 2012); however, 2012 was the safest year in aviation history with regard to the number of hull losses (44, compared with 48 in 2013) (JACDEC media statement, 2013).

2.10.2 From the perspective of the linear approach to safety, 2013 was the safest year to fly with regard to the number of fatalities. From the perspective of the systemic approach, we do not know: we could simply have been lucky, as the number of hull losses was about 9 percent higher than in 2012.
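The two indicators quoted in paragraph 2.10.1 move in opposite directions, which is the basis of the argument above. A short calculation using only the figures given in the text makes the size of each change explicit; the helper name `percent_change` is ours, introduced purely for illustration.

```python
# Figures as quoted in paragraph 2.10.1 (JACDEC media statement, 2013).
FATALITIES = {2012: 496, 2013: 251}
HULL_LOSSES = {2012: 44, 2013: 48}


def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return 100.0 * (new - old) / old


fatality_change = percent_change(FATALITIES[2012], FATALITIES[2013])
hull_loss_change = percent_change(HULL_LOSSES[2012], HULL_LOSSES[2013])
print(f"Fatalities 2012->2013: {fatality_change:+.1f}%")    # -49.4%
print(f"Hull losses 2012->2013: {hull_loss_change:+.1f}%")  # +9.1%
```

Fatalities roughly halved while hull losses rose by about 9 percent, so a single outcome count does not by itself show whether the underlying system became safer.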

2.10.3 The foundation for the present level of safety is the linear approach to safety. Research on incidents, accidents and unsafe system outcomes will always be needed to improve the level of safety. But we need to add the systemic approach to obtain a more complete view of safety. The systemic approach adds to the understanding of the increasingly complex and interacting systems and procedures used by ATCOs in the modern era.

Conclusions

3.1 Definition of the linear accident model:

The linear accident model is an accident model in which the relation between cause and outcome is (simplistically) defined as linear. This method is best used in systems of low complexity.

3.2 It can be concluded that the linear accident model results in an over-simplistic view of a system. It aims mostly at mitigating risk by issuing norms, guidelines and instructions for the human operator. As a bimodal model, it assumes that behaviour can only be right or wrong. This can, and is expected to, reduce system flexibility and therefore, ultimately, safety itself.

3.3 Definition of the systemic accident model:

The systemic accident model is an accident model in which multiple relations and correlations are considered and mapped. This model is essential for understanding complex systems with multiple factors.

3.4 In PLC’s view, application of the systemic accident model implies continued use of the norms and regulations established through the linear model.

3.5 It is further concluded that, for complex organisations and systems such as air traffic control, the systemic accident model is the best way to identify and establish the precursors that lead up to a failure.

3.6 Definition of Safety I:

The Safety I approach is a method of ensuring safety in a system in which the aim is to minimise failure, so that the number of things that go wrong (cause and effect) is as low as possible. This is achieved by first finding, and then eliminating or weakening, the causes of adverse outcomes, based on established relations.

3.7 Definition of Safety II:

The Safety II approach is a method of ensuring safety in a system in which the aim is to ensure resilience. Because the system is too complex to foresee and mitigate everything that might go wrong, it needs to be engineered so that the variable factor (human operators) can intervene. Safety is the ability to succeed under varying conditions. Safety II requires an understanding of everyday performance.

3.8 The Safety II approach acknowledges that a system can never be fully understood. This alone means that no comprehensive set of rules or regulations can be derived from the system; the system is seen as a black box.

3.9 It is concluded that neither the Safety I nor the Safety II approach should be applied on its own; the two approaches are intertwined in their effective application.

3.10 PLC will continue to study this subject in the future.

Recommendations

The following definitions should be added to the IFATCA manual:

4.1 Definition Linear Accident model:

The linear accident model is an accident model in which the relation between cause and outcome is (simplistically) defined as linear. This method is best used in systems of low complexity.

4.2 Definition systemic accident model:

The systemic accident model is an accident model in which multiple relations and correlations are considered and mapped. This model is essential for understanding complex systems with multiple factors.

4.3 Definition Safety I approach to safety:

The Safety I approach means that the number of things that go wrong (accidents / incidents) is as low as possible. This approach is achieved by first finding and then eliminating or weakening the causes of adverse outcomes, resulting in norms and guidelines.

4.4 Definition Safety II approach to safety:

The Safety II approach is a method of ensuring safety in a system in which the aim is to ensure resilience. Because the system is too complex to foresee and mitigate everything that might go wrong, it needs to be engineered so that the variable factor (human operators) can intervene. Safety is the ability to succeed under varying conditions. Safety II requires an understanding of everyday performance.

References

Albert P. Iskrant (1962), The epidemiologic approach to accident causation.

J. Rasmussen (1990), Human error and the problem of causality in analysis of accidents.

R.I. Cook (1991 – 1999), Cognitive technologies laboratory, A brief look at the new look in complex system failure, error, and safety.

R.I. Cook (1998,1999,2000), Cognitive technologies Laboratory, How complex systems fail.

Dr. Dominic Cooper (2001), Improving Safety Culture: A practical Guide.

R. Amalberti (2001), Departement des Sciences Cognitives, The paradoxes of almost totally safe transportation systems.

D.D. Woods and R.I. Cook (2002), Cognition, Technology & Work, Nine steps to move forward from error.

Erik Hollnagel (2002), Understanding accidents from root causes to performance variabilities.

F. Halberg et al. (2003), ‘Transdisciplinary unifying implications of circadian findings in the 1950s’.

Emily S. Patterson, Richard I. Cook, David D. Woods and Marta L. Render (2004), Gaps and Resilience.

Nancy G. Leveson (2010), Applying systems thinking to analyze and learn from events.

Erik Hollnagel (2012), ‘A Tale of two safeties’.

ICAO Annex 19 (2012), Safety Management.

Erik Hollnagel (2012), The functional resonance analysis method; modelling complex socio-technical systems. First step for systemic approach for better understanding.

Erik Hollnagel (2012), From reactive to proactive safety management.

ICAO document 9859 (2013), Safety Management Manual.

IFATCA (2013), Technical and Professional manual.

T. Laursen and Anthony Smoker, IFATCA WP 165 (2013), ‘Resilience and a new Safety Approach’.

Erik Hollnagel, Jorg Leonhardt, Tony Licu and Steven Shorrock (2013), From Safety-I to Safety-II, A White Paper, Eurocontrol.

T. Laursen and Dr. A. Smoker (2013), IFATCA The Controller October 2013, Resilience and a new Safety Approach.

Charles Vincent, Susan Burnett and Jane Carthey (2013), The measurement and monitoring of safety.

Steven Shorrock (2013), ‘Human error’: The handicap of human factors, safety and justice.

Inforsafety (02-2013), KLM Royal Dutch Airlines, Feature Story; resilience: Safety 2.0.

Resilience Engineering Association (2013), https://www.resilience-engineering-association.org/