52ND ANNUAL CONFERENCE, Bali, Indonesia, 24-28 April 2013

WP No. 165

Resilience and a New Safety Approach – Do We Have the Right Model to Learn from Past and Future Events?

Presented by SESAR Coordinator

Summary

The aim of this paper is to highlight the difference between a linear safety approach (and the models behind it), which has been the foundation of safety science for many decades, and the safety approach following more contemporary ideas, e.g. systemic thinking and Resilience engineering, which is labelled in this paper ‘the systemic approach’.

There is a perceived need for this today, because international organisations such as ICAO, EASA, Eurocontrol and SESAR promote a systemic approach to safety, but fundamentally continue to develop methods and tools firmly rooted in the linear safety approach. Therefore there is a need for clarification, as well as a need for discussion about the subtle but nevertheless important differences between the two approaches. Furthermore, this Work Paper will suggest that IFATCA adopts and promotes the principles and models that support the systemic model exclusively as its conception of safety.

This paper will elaborate on the consequences of the two different approaches with examples. Additionally, the paper will be used to propose a way forward for SESAR (Single European Sky ATM Research) and the SESAR-JU as well as suggestions and recommendations for IFATCA.

The ideas in this paper are triggered by IFATCA’s involvement in SESAR, by the evolving and progressive developments in safety science that are being promoted by the Resilience Engineering movement, and by first-hand experience of safety within ANSPs in Europe.

No examples have been integrated from NEXTGEN or other similar global initiatives.

Problem Statement

Technology and society have coexisted for many years. The Industrial Revolution is some two hundred years old, and technology-related accidents were a feature of that epoch. Safety and the notion of risk are therefore nothing new: they are a by-product of the industrialisation of society, as, it is argued, are some of the health and safety regulations that still inform legislation today (Silbey, 2009). The technical systems of that time were as simple as the societies they coexisted with and were meant to serve.

Aviation is a late addition to post-industrial-revolution society, but it finds itself with the legacy of the late industrial epoch and its conception of safety.

Traditional approaches to safety suited the nature of the systems, the structural features of the socio-technical systems at the time. They evolved to cope with the specific challenges of the technical systems – reliability, dependability and component or technical failure of the technical element of the systems.

Socio-technical systems changed, however, in the fifties and beyond, as designers sought to realise the immense potential of the technical system. The human component was, and still is today, seen in mechanistic terms. Technical system development has often strived to optimise the technical potential of the combined human and technical system by allocating functions according to the ubiquitous Fitts list (Fitts, 1951) and ‘MABA-MABA’ (Dekker and Woods, 2002). ‘MABA-MABA’ is an acronym for ‘Men Are Better At, Machines Are Better At’ and is an approach to the allocation of functions between human and technical systems (typically automation). It is a latter-day application of the Fitts list, which was itself probably the first formal definition of the allocation of functions between man and system in air navigation. Such approaches assume, implicitly, that man and machine coexist in an independent harmony rather than work together.

The nature of socio-technical systems has evolved, and with this evolution have come fundamental changes in how the socio-technical system functions.

Leveson (2011) argues that significant changes in the types of systems that are being designed today, in contrast with the simpler systems of the past, stretch safety engineering in the following ways:

- Fast pace of change: Technology is changing faster than our knowledge is growing to envisage the new paths of system behaviours. Lessons from the past are ineffectual in informing the future, and at the same time our organisations no longer have memory of why systems have evolved in the way that they have.

- Reduced ability to learn from experience: The pressure to implement new systems reduces the time to test and validate new systems, thus reducing the opportunity to learn about new system behaviours.

- Changing nature of accidents

- New types of hazards

- Increasing complexity and coupling

- Decreasing tolerance for single accidents

- Difficulty in selecting priorities and making trade-offs

- More complex relationships between humans and automation

- Changing regulatory and public views of safety

2.1. Old and New Socio-Technical systems

The nature of socio-technical systems has evolved, for example in terms of their complexity and the way that components interact. These changes, in and of themselves, influence the ways that safety needs to be considered today.

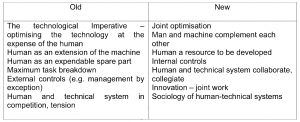

The table below encapsulates the nature of the change:

Whereas the philosophy of the past was one of optimising the technical system with the human as an adjunct, future socio-technical systems must seek to optimise the human and the technical system in collaboration. One example of this approach is Computer Supported Cooperative Work (CSCW). Moreover, the sociology of the system, such as the organisational aspects of the socio-technical system, has become significantly more influential in the safe operation of the system as a whole, in a holistic sense.

A common feature of the ‘old view’ epoch of socio-technical systems is to consider the human component as a source of noise, as an errant and unreliable system component. The human is a necessary addition that placates the societal need to have a human in control who is thus responsible and accountable, and who is capable of maintaining control of the system when unexpected events occur or when the technical system is confronted with events outside its design environment or envelope. The human, in other words, can play the part of both the hero and the anti-hero.

2.2. Two approaches to safety

The safety approach of the industrial age, up to the closing years of the twentieth century, was founded on the notion that safety is defined by its opposite: by what happens when safety is missing. This means that safety is measured indirectly, not by its presence or as a property in and of itself, but by its absence or, as Hollnagel (2012) describes it, the consequence of its absence. This approach is preoccupied with what goes wrong. Improved safety performance is achieved by determining the causal factor(s) that led to events (incidents, accidents, errors, failures and other inadequacies within socio-technical systems) and then removing or mitigating those causal factors. This reduces socio-technical system behaviour, which is often complex, to a simple and intrinsically linear cause and effect relationship.

Historically speaking, therefore, explaining accidents and incidents by such a simplistic cause and effect relationship, irrespective of the simple or complex nature of the system itself, reduces the safety problem space to a vignette, and we no longer notice the implicit model behind our thinking.

Three prominent and widely used models of this perspective of safety science are:

- The ‘Barrier’ model (Heinrich)

- The ‘Iceberg’ model (Heinrich)

- The ‘Swiss Cheese’ model (Reason)

A number of methods have been developed that provide the means to describe and assess system behaviours in order to determine hazards, e.g. fault tree, bow tie, SAM, Human Reliability Analysis and Tripod, to mention just a few. These have all been developed on the principle that systems fail because of the combination of multiple small failures, each of which is individually insufficient to drive a complex system to fail. This analysis then leads to the quantification of risk and assumes mitigations to these risks. Frequently such analyses use the human component as the mitigation strategy to manage the identified risk. In this paper we will call this approach the linear model.
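To make the linear logic concrete, the following is a minimal sketch of how a fault-tree style calculation combines small failures into a top-event probability. It is an illustration only: the event names and probabilities are hypothetical, the events are assumed to be statistically independent, and it is not taken from SAM or any other method named above.

```python
from math import prod


def and_gate(probabilities):
    """Output event occurs only if every input event occurs (independence assumed)."""
    return prod(probabilities)


def or_gate(probabilities):
    """Output event occurs if at least one input event occurs (independence assumed)."""
    return 1.0 - prod(1.0 - p for p in probabilities)


# Hypothetical basic-event probabilities, chosen only for illustration.
p_sensor_fault = 1e-3
p_display_fault = 2e-3
p_operator_misses_fault = 1e-2  # the human modelled as just another component

# A technical fault exists if either the sensor or the display fails.
p_technical_fault = or_gate([p_sensor_fault, p_display_fault])

# Top event: a technical fault occurs AND the human 'mitigation' fails.
p_top_event = and_gate([p_technical_fault, p_operator_misses_fault])

print(f"P(technical fault) = {p_technical_fault:.6f}")
print(f"P(top event)       = {p_top_event:.3e}")
```

Note how the human appears only as one more probability in the product. This is the sense in which the linear model treats the operator as a mechanistic component and as the last mitigation against the identified risk.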

In the linear description of safety, humans and their behaviour are constructed as an entity that can be modelled and measured with mechanistic and deterministic methods, and therefore with precision. The result is huge and unwieldy ‘fault trees’ and diagrams in which we can qualify and quantify the behaviour of the human, the machines and the interaction between them, but which reduce the safety space to, at best, an overly simplistic approximation of the real world.

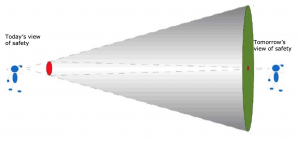

Another consequence of cause and effect thinking is that we tend to separate incidents and accidents from the processes that create positive results and system behaviours. We thereby treat the processes that create a negative result as different from the processes that create a positive result. This results in an inability to see the whole, and we tend to focus on single events to solve problems (see Fig. 1). Over the last two decades the linear model has come under increasing pressure, primarily because it has been unable to account for more and more complex accidents.

Fig. 1: When the focus is on that which goes wrong (accidents, incidents, etc.), then it is difficult to see that which goes right. (Guillermo G. Garray)

As discussed previously, socio-technical systems have become ever more complex. By complex we mean specifically the difficulty of predicting system behaviour because of the number of interactions. The capability to predict, which is a key precept of the linear safety model, is significantly reduced as systems grow in complexity. To cope with the more complex system behaviours we face, i.e. accidents and incidents, the systemic model has emerged as an alternative. Instead of decomposing system behaviour into events over time, the systemic approach focuses on systems as a whole, holistically. This approach assumes that system properties can only be treated adequately in their entirety, taking into account all facets relating the social to the technical aspects (Ramo, 1973) and their emergent properties. There is little or no separation of humans, technology, organisations and society.
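One way to get a feel for why prediction degrades with the number of interactions is to count them. The sketch below assumes, purely for illustration, that every component can interact with every other one; this is an upper bound and not a claim about any real ATM system. The function name is hypothetical.

```python
from math import comb


def pairwise_interactions(n_components):
    """Upper bound on distinct two-way interactions among n components."""
    return comb(n_components, 2)


# The number of potential interactions grows roughly with the square
# of the number of components, so it quickly outruns the component count.
for n in (5, 10, 50, 100):
    print(f"{n:>3} components -> {pairwise_interactions(n):>5} potential pairwise interactions")
```

Under this assumption, 10 components give 45 potential pairwise interactions and 100 components give 4950. Interactions involving three or more components at once would grow faster still.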

Systemic models assume that failure and success stem from the same sources and that socio-technical systems can rarely, if at all, be described by their component parts. The often-unchallenged principle that more technology is the key to more production, and to environmental and economic efficiency, drives the systems we control. They become ever more complex and it becomes very difficult to identify linear cause-effect relations. Paths of system behaviours may be invariant to linear interactions or trajectories. This means that it is not possible to observe incidents and accidents in isolation if we want to improve the systems we try to control. One example of this is when software engineers have to improve a device: they are no longer able to say exactly what is going to happen when they implement an updated version. The complexity is simply too high. The systemic model assumes that systems work because people learn to identify how technology works, recognise the actual demands and adjust their performance accordingly, and because they interpret and apply procedures to match current conditions. If this assumption is correct, it calls for a more holistic view of safety, and the systemic approach requires us to understand the system as a whole instead of by its parts.

2.3. A comparative example of the Linear and Systemic approaches

As an example, consider a human on a bicycle and compare the safety of this everyday activity through the lenses of a linear approach and a systemic approach to cycling safety.

2.3.1. The Linear Model

With a linear model we can easily explain how the mechanical parts of the bicycle work, and we can find the problem if there is a fault with the tyres, the chain or other parts of the bicycle. We can probably also explain events with a human riding the bicycle under trouble-free conditions, by abstracting the human to a sub-set of the mechanical system. A linear model of this particular system would see the human as a system component.

Consider a cyclist who indicates a change of direction and, in the manoeuvre, is involved in a collision. In linear terms, what will be the scope of the analysis? The bicycle will be examined for failures of its physical artefacts; the cyclist will provide his/her account of events and other actors will do likewise. If all mechanical features of the vehicles that collided are deemed to be functioning normally and/or without defect, what remains? The human component is invariably the only remaining system component. The explanation will in all probability describe the event in terms of a human failure: the human failing to see the other vehicle, or not following procedures by failing to indicate. This is typically labelled as human error. Once this has been found as ‘the cause’, there is no need to go any further. An explanation has been found and the cause, possibly defined as the ‘root cause’, has been ascertained. All can go to bed and sleep soundly knowing this, and we can prevent a recurrence by imploring drivers to be more vigilant and to follow the rules of the road or Highway Code.

It is also probable that an explanation will be given of events with a human riding the bicycle under trouble-free conditions. However, when considering the human and the bicycle in one of their normal operating environments (on the road network, in traffic with a lot of cars, other bicycles, pedestrians, road works, time pressures, at dusk, etc.), it becomes more complex to explain a particular event using the linear model.

2.3.2. The Systemic Approach

The systemic approach differs in many ways, but most notably because it considers the context of the activity being undertaken. When the analysis reaches the point where human behaviour is considered, the systemic approach does not end there; instead, human behaviour is used as a starting point to understand what happened and why decisions made sense to those making them. It strives to understand the local rationality of the human in the system and how the system influences such decisions. The systemic approach may involve exploring the historical trajectory of how decisions were made and the limitations or practicality of the work undertaken.

Thus in the cycling analogy, when a vehicle driver reports that he did not see the other vehicle, this leads to the question: why not? If the driver was in a car, was the line of sight perhaps obscured by the window architecture of the vehicle itself? Was the geometry of the road layout such that the driver had conflicting demands on the limited visual channel of human cognition? Was one of the actors under time pressure that biased perception and altered risk acceptance? Was the vehicle equipped with safety features that generated a style of driving that altered the risk homeostasis, but that failed, making this model of risk inappropriate in this instance? The simple system is no longer simply expressed in these terms.

If explaining events in ‘simple systems’ such as the human and bicycle (and this example is indeed simple in its normal operating environment) makes the human the principal failure mode, then one must conclude that with more complex systems the linear approach has profound limitations. The conclusion, on the basis of the above, is that there is a need for more powerful models to help account for events in the ATM system.

2.4. The Fundamental discussion

The two approaches to safety are based on fundamentally different concepts.

Linear safety models are based upon:

- Systems can be described and understood in detail

- Cause-effect relations

- Traceability

- Removing causes and implementing barriers

- Countermeasures to problems are writing a procedure, training or new technology

Systemic models are based upon:

- Systems can’t be understood and described fully

- Understanding of functions rather than parts

- Failure and success stem from the same sources

- Problems will occur and we will always have to work on the improvements

- Countermeasures to problems are based on how people or organizations cope with complexity

The linear approach to safety has helped to achieve remarkable results. Aviation is one of the safest ways of travel today and linear safety models have played a prominent part in achieving this. However, there are signs that there is no further ability to improve the safety levels achieved today using such methods and philosophies and new approaches are required to make progress. Furthermore, the linear model has a number of limitations and can induce negative consequences that need to be considered. These are known as cultural aspects.

Taking into account that culture is a difficult term with little precise meaning, the argument is considered in three dimensions:

- Responsibility,

- Language,

- ‘Just culture’.

2.4.1. Responsibility

Linear models and responsibility seem to be a perfect match. Because of the explicit direct linkage between causes and effects, there is also an implicit direct linkage between the responsibility of the human operator and system outcomes. This makes the linear model very attractive when one wants to make safety interventions and improvements and remove causes. Significantly, it also makes it very attractive for society and organisations, because the public can see that causes are being removed and that it is again safe to fly when such interventions are made on the basis of presumed responsibility, or the failure of it. A by-product of this way of acting is therefore the apportioning of blame and responsibility. Today it is a lot easier for judicial systems (internal or external) to think of human operators as errant system components and guilty persons. This by-product is often overlooked, or it is even argued that the allocation of responsibility for accidents and incidents is necessary and a good thing. The argument is that if people are held responsible for their actions they will eventually improve their behaviour and thereby system performance. In daily experience the positive effects of allocating responsibility (if there are any) are overshadowed by the rather negative consequences for individuals’ willingness to take responsibility at all levels of the system. Early on it was mainly operators who were the focus of attention after accidents and incidents, but today there is a tendency to focus on managers as well. The pressure produced by the linearity of responsibility results in a huge number of ‘save your own back’ and ‘tick in the box’ procedures and regulations, which hamper the system’s ability to respond to problems that occur.

2.4.2. Language

Another issue that challenges the linear model is the language that it creates. When the focus is on causes and inadequacies, the resulting language can easily be restricted to that of personnel shortcomings: the human as errant system component. Too many accident and incident reports include internalised language that speculates about motives, attitudes and behaviour. Aviation language is very rich in helping the responsibility argument by expressing error in all kinds of disguises (situational awareness, poor airmanship, etc.). Within organisations in Europe and in the Middle East there has always been a commitment to promote systemic thinking, but in the end it mainly results in individuals being stigmatised (which is a nasty way of blaming people) or directly blamed by managers, colleagues or the public. Professional staff are strong believers that humans have good intentions, and they believe and expect that colleagues, managers and the public do not intend to blame individuals, but today the result inevitably ends up very different from these expectations. The emphasis and blame that everyday language puts on personal responsibility and individual shortcomings at all levels of organisations and in the industry is huge and not at all proportionate to what real-life work is about, namely that it takes teamwork (humans, organisations, technology and society) to succeed, just as it takes teamwork to fail (Dekker, 2002).

In contrast to this, the model that underpins resilience (the systemic model) introduces a language of understanding and defining how processes work and of strengthening these processes. Systemic models consider failure and success as two sides of the same coin (Hollnagel, Woods and Leveson, 2006). Failure is the inevitable by-product of striving for success with limited resources (Dekker, 2002). This area has not yet been explored in depth, but it is at least open to speculation that introducing this language will result in a more positive way of looking at what has been achieved and at where to look for improvement of systems.

2.4.3. Just Culture

‘Just Culture’ has been defined as: a culture in which front line operators or others are not punished for actions, omissions or decisions taken by them that are commensurate with their experience and training, but where gross negligence, wilful violations and destructive acts are not tolerated (Eurocontrol, 2008). This is a collection of nice sounding words that we all seemingly can agree upon.

If the spirit of the words is applied in the context of investigations of incidents and accidents, there is a tendency to rationalise based on the seductive benefits and utility of the linear model, and it therefore becomes difficult to handle the words in the definition. The focus of linear models on individual responsibility and human error logically opens the discussion about whether actions were grossly negligent or not. This discussion introduces a bias and a skewed focus on individuals and their responsibilities, and makes it difficult to learn from experience. Gitte Haslebo, a Danish psychologist, puts it this way: the desire humans have to learn from personal mistakes is cumbersome in an environment where thinking, discussions in meetings, reward systems, etc. are based on the linear explanation model. Haslebo goes on to explain the close connection between the innocent question ‘what caused the incident’ and the question ‘who was responsible’. In the quest for learning from mistakes we tend to hamper the learning process with the language we use and the model behind our words. In this way our fundamental understanding of how we learn and make progress becomes a hindrance to learning and creates distance between members of the organisation.

Another issue that makes it difficult to report and learn from mistakes is the concept of human error. Society as a whole still prefers to talk about the concept of human error and to play a blame game. Human error is part of society’s daily language and can be found in the news, amongst ATCOs, amongst managers, in accident reports, in public statements, etc.

There is, in addition, another perspective. If ‘human error’ is the cause of events that go wrong, what is the cause of all the other events that go right? The same humans that err are also the ones that are present when things go right. The only possible answer therefore is: humans. However, they behave in the same manner regardless of whether the outcomes of their actions turn out to be positive or negative, simply because they cannot know the outcome at the time that actions are taken. It follows that ‘human error’ should not be used to explain adverse outcomes since it invokes an ad hoc ‘mechanism’. Instead, a more productive view is to try to understand how performance varies, and determine why the behaviour that usually makes things go right occasionally makes things go wrong (Besnard, Hollnagel, 2012).

A humorous footnote about ‘human error’ is that it is a judgment that is always made with the benefit of hindsight, and that it is the luxury of humans who operate brittle and high-risk systems (pilots, ATCOs, bus drivers, technicians, medical staff, etc.) to have the ability and facility to commit ‘human error’. Rarely, if ever, is it heard that ‘human error’ has been committed at the level of the manager or in the higher echelons of an organisation.

ATCOs, managers and society must find a way to think in terms of systemic relationships in ATM. This is also the best outcome for ‘just culture’. Today the industry, although not deliberately, is hindering the promotion of systemic analysis of incidents, accidents and safety assessment. Today’s approaches to these analyses and assessments are all linear based and thereby tend to focus on individual human performance and responsibility: humans as bad apples. The balance between the focus on systemic findings and the focus on human causes needs to change, and IFATCA, in close cooperation with other ‘sharp-end’ users’ associations, has to be a frontrunner in that process. Critique of the systemic approach is often directed towards the accountability and responsibility of the individual manager or operator. The preceding paragraphs have already mentioned some of the negative effects of using mishaps to increase the awareness of individual responsibility.

Furthermore, being involved in incidents and accidents as an operator is by its very nature accompanied by emotional involvement (sleepless nights, loss of confidence and self-esteem, self-blame, etc.), to such an extent that there is no need for the organisation or society to trouble the individual any further. If IFATCA adopts the systemic approach, then the individual will be involved directly in helping the organisation to understand where sources of risk lie, and thus to address them. This form of accountability, prospective rather than retrospective, is far more productive and will help organisations to learn from mistakes and to focus on allocating resources that can increase their safety understanding and safety performance.

To sum up the above arguments the following table might help (Dekker, Laursen, 2007):

| | Linear | Systemic |
|---|---|---|
| Role of the individual | Victim of circumstances who gets blamed for getting into them. | Empowered employee able to contribute meaningfully to organisational safety. |
| Role of manager / staff | Manager must hear from reporter where s/he went wrong and why, and managers can be blamed themselves. | Manager and staff focus on contextual improvement. |
| Mechanism for getting at source of risk | Line organisation helps reporter understand that s/he was major source of risk. | Reporter helps organisation understand where sources of risk lie in the operation. |
| Reporting process seen by employees as | Illegitimate and adversarial non-expert intrusion, possibly career-compromising. | Legitimate, expert peer-based, non-prestigious cooperation to create greater safety. |
| Typical organisational response | Extra training, removal of single causes or reprimand for the individual. | Digging for deeper, systemic areas for improvement and structural changes. |
| Learning mechanism | Correcting deviant human elements in the operation. | Enhancing system safety by changing work conditions. |
| Organisational reflex | Repeat what you did before. Blaming operators stops errors. | Keep learning how to learn. You are never sure and never done. |

With these arguments in mind, the approach taken by International organisations should be reconsidered and challenged to identify what can be done to promote methods and ideas based on the systemic approach.

2.5. SESAR and safety

SESAR is an ATM development program with emphasis on creating an efficient ATM system to serve society by developing and deploying new tools and extended use of automation, in order to fulfil a new philosophy of how we organise Air Traffic Management (ATM). As yet, SESAR is not an implementation program and therefore there is an emphasis on testing systems before they are in operation. The safety work in SESAR is concerned with how to ensure that systems are safe before implementation. The safety part of SESAR is based on Eurocontrol’s safety assessment method (SAM). In addition to this, the SESAR project management decided to introduce Resilience into the safety assessment process. Both the linear approach to safety from Eurocontrol (the SAM methodology) and the systemic, Resilience-based approach are merged in the Safety Reference Material (SRM) within the program.

IFATCA representatives were able to present the ‘new view’ approach to safety, and the essential requirements attached to it, to the SJU Executive Director. In particular, the need for a more forward-oriented approach was proposed to the SJU. Particular focus was put on the need to integrate a less linear approach in the transition phase from current research to implementation. As safety is dealt with in SESAR as a transversal activity, efforts have to be made to stress the importance of the activity undertaken in the Resilience work, as the system will be exposed and needs to be both understood and explained through a holistic view in order to reach the systemic factors. Possible additional savings in resources for the implementation phases have been addressed as follows:

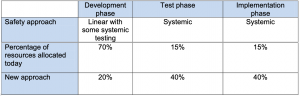

Presenting the allocation of safety assessment resources of today in combination with the suggestion of how to do it tomorrow could graphically look like this:

The numbers presented above are not scientifically derived, but are there to illustrate how to think about the reallocation of resources and to show that there is a need for testing our systems based on a systemic philosophy. This relatively innovative way of linking the new approach to an economic perspective could in the future be used for any kind of ATM modernisation program (e.g. ASBU, NEXTGEN or similar initiatives).

Conclusions

This paper argues for the need to change the balance between the linear and the systems approach when the aviation industry considers safety in its operations. This is mainly because of the cultural implications and problems that the linear approach produces, but also because the overall system of human, machine and organisation will benefit from improved use of resources when new concepts and technology are introduced.

The paper uses the experiences IFATCA has gained thanks to the involvement in SESAR. No experience or involvement of similar kind was available to the Global Safety Team at the time of writing this paper.

Recommendations

It is recommended that IFATCA:

4.1. Promotes reduction in the use of linear models, recognising that there is a place for these models at the outset;

4.2. Adopts the systems approach to safety as its preferred conception of safety, and reviews and amends the relevant policies and statements accordingly;

4.3. Calls upon the Executive Board, i.e. EVPP, EVPT and the Global Safety Team Coordinator, to undertake this review and propose changes by Conference 2015;

4.4. Tasks the Global Safety Team to publish a position paper on the adoption of the systems approach to all formal bodies;

4.5. Ensures that the agendas of the regional meetings in 2013 are extended to discuss and brief the systemic approach to safety;

4.6. Works with other agencies, in particular with other associations representing front-end users of the ATM system, to develop practical applications of the systemic approach;

4.7. Directs the ICAO Safety Panel and ICAO representative to actively pursue the adoption of an explicit systemic approach to safety in all ICAO activities, focusing in particular on ASBU and GASP.

References

Besnard, D., Hollnagel, E. (2012). I want to believe: Some myths about the management of industrial safety. Cognition, Technology & Work. Available at https://www.springerlink.com.

Dekker, S. (2002). The Field Guide to Human Error Investigations: Linkoping, S: Ashgate.

Dekker, S.W.A, Laursen, T. (2007): From punitive action to confidential reporting: A longitudinal study of organizational learning from incidents. Patient Safety & Quality Healthcare, www.psqh.com.

Dekker, S.W.A., Woods, D.D. (2002). MABA-MABA or Abracadabra: Progress on Human-Automation Coordination. Cognition, Technology & Work, 4, 240–244.

Garray, G. G. (2012): Safety and Resilience: Presented at the Sim-Trans meeting at Naviar DK, www.simtrans.dk.

Hollnagel, E., Woods, D. D., & Leveson, N. (2006). Resilience Engineering: Concepts and Precepts. Ashgate.

Eurocontrol (2008). Just Culture Guidance Material for Interfacing with the media. Eurocontrol.

Fitts PM (1951). Human engineering for an effective air navigation and traffic control system. National Research Council, Washington, DC.

Haslebo, G (2004). Relationer I organisationer, en verden til forskel. Dansk Psykologisk Forlag.

Hollnagel, E., (2012). From Reactive to Proactive Safety Management. DFS safety Letter 2012.

Leveson, N. (2011). Engineering a Safer World: Systems thinking Applied to Safety. Cambridge, Massachusetts, The MIT Press.

Ramo, S. (1973). The systems approach. In Miles, R. F., Jr. (Ed.), Systems Concepts: Lectures on Contemporary Approaches to Systems. New York, John Wiley & Sons, pp 13-32.

Reason, J. (1991). Human Error. Cambridge, Cambs; Cambridge University Press.

Reason, J. (1993). Managing the Risks of Organizational Accidents. Aldershot, Hants: Ashgate Publishing.

Silbey, S. (2009). Taming Prometheus: Talk About Safety and Culture. Annual Review of Sociology, 35, 341 – 69.